Audio Watermarks for the Second Screen

Buzz about the second screen is becoming ubiquitous, as this recent (and, for me, incredibly thought-provoking) Filmmaker article by Scott Macaulay shows. Coordinating content so that it plays (in any sense of that word) simultaneously on two devices, which some have called “orchestrated media,” is one of the next big things, and it’s pushing content creators to search for new ways to make their dual-screen programs stand out. First, as that article points out, it requires new mental paradigms for filmmakers and digital storytellers as they plan and execute their work. But second, and just as important, it requires the technological means to realize that vision.

Hence I was quite interested when I recently heard about audio watermarks. The concept, which in this case relies on a technique called echo modulation (that is, the creation of faint, artificial echoes, explained in more technical detail here), has been around for years, but it has found new uses since the iPhone upended the smartphone industry. On the surface it’s rather simple: just as digital files store information as strings of binary ones and zeros, audio watermarks hide small packets of information inside an audio signal. Embedded in the soundtrack playing on one screen, these signals are designed to be imperceptible to viewers, yet they can be picked up by the microphone of a handheld device, the second screen. When that device is running an app containing the appropriate decoding software, it reacts to (or interacts with) the first screen with coordinated media, which could mean anything from video to games, text, social media, links to websites (e.g. advertisements), or anything else. Think of it as a cooler, more up-to-date QR code that users never even know is there.
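For readers curious about the mechanics, here is a minimal sketch of echo hiding, the textbook form of echo modulation: each segment of audio gets a faint copy of itself added back after one of two short delays, and the choice of delay carries one bit. This is an illustration under stated assumptions, not Intrasonics’ method. The segment length, delays, and echo strength are made-up values, the echo is exaggerated so the demo works on a tiny signal, the “soundtrack” is synthetic white noise, and the decoder uses plain autocorrelation where practical systems typically use cepstral analysis to cope with real music and room acoustics.

```python
import random

# Illustrative parameters only; none of these come from a real product.
SEGMENT = 2048   # samples per embedded bit
DELAY_0 = 50     # echo delay (in samples) that signals a 0 bit
DELAY_1 = 80     # echo delay (in samples) that signals a 1 bit
ALPHA = 0.5      # echo strength, exaggerated; real watermarks use far fainter echoes


def embed(host, bits):
    """Return a copy of `host` with a delayed echo added per segment; the delay encodes the bit."""
    out = list(host)
    for k, bit in enumerate(bits):
        delay = DELAY_1 if bit else DELAY_0
        start = k * SEGMENT
        for n in range(start, start + SEGMENT):
            if n - delay >= 0:
                out[n] += ALPHA * host[n - delay]
    return out


def autocorr(signal, start, lag):
    """Sum of signal[n] * signal[n - lag] over one segment (both samples inside the segment)."""
    return sum(signal[n] * signal[n - lag] for n in range(start + lag, start + SEGMENT))


def decode(signal, n_bits):
    """Recover each bit by checking which candidate delay shows the stronger echo."""
    bits = []
    for k in range(n_bits):
        start = k * SEGMENT
        r0 = autocorr(signal, start, DELAY_0)
        r1 = autocorr(signal, start, DELAY_1)
        bits.append(1 if r1 > r0 else 0)
    return bits


if __name__ == "__main__":
    rng = random.Random(42)
    payload = [1, 0, 1, 1, 0, 0, 1, 0]
    # Stand-in "soundtrack": white noise, which keeps the autocorrelation decoder simple.
    host = [rng.gauss(0.0, 1.0) for _ in range(SEGMENT * len(payload))]
    marked = embed(host, payload)
    print(decode(marked, len(payload)))
```

With these exaggerated settings the echo peak dwarfs the random correlation in noise, so the decoder recovers the payload; the engineering challenge in real products is keeping the echo faint enough to stay unnoticed while still surviving loudspeakers, room noise, and a phone microphone.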

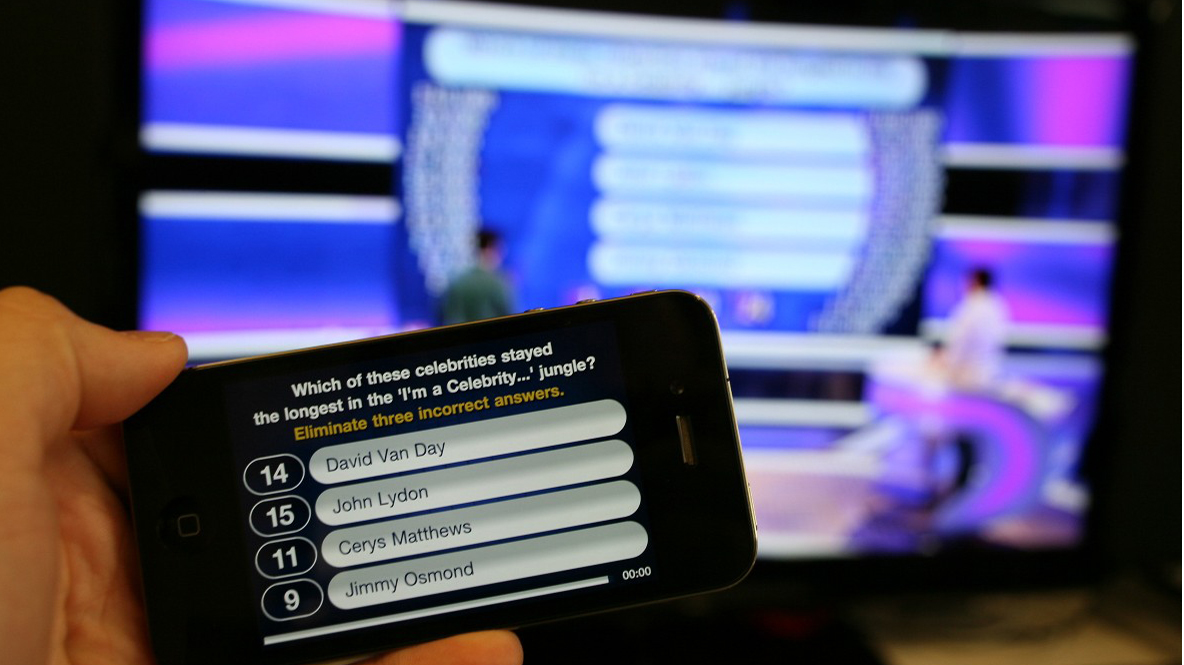

In recent years, one of the most promising firms developing audio watermark software has been Intrasonics in Cambridge, UK. I spoke with Dr. Michael Woodley of the company’s business development department, who said that Intrasonics began in 2000 as a group of researchers at a technology incubator; by 2007 they were patenting a wide range of technology for interactive media. This was right when smartphones were getting really smart, with the three necessary elements of “a microphone, a processor and a screen.” Intrasonics’ technology soon turned up in programs like the BBC’s National Lottery game show Secret Fortune (pictured above and discussed here). Under new owners since 2007/8, Intrasonics now seeks to “develop and package the technology for a range of markets from broadcast audience research to synchronised companion apps for TV and even to interactive toys.”

Companies like Intrasonics don’t develop or release apps themselves. Rather, Intrasonics creates a Software Development Kit (SDK) that content producers (broadcasters, production companies, and app developers) can incorporate into their work. The echo modulation codes themselves, which Woodley likens to unique serial numbers, are licensed to customers. Once they have the licenses they need, content producers do the work in two coordinated parts: video editors insert the watermark into their timelines in Final Cut or Avid, and app developers program their apps to listen for the signals when the video is played. And while Intrasonics specializes in audio watermarks, Woodley points out that they are not the only way to link a second screen to a program. The other main method is audio fingerprinting, which embeds nothing and instead identifies a program by matching characteristics of its existing soundtrack against a reference database. Each has its pros and cons, but he claims that “one thing we know for certain is that audio watermarking is the only way to establish a perfect time sync between first and second screen content, and it is the only method possible when there is no guarantee of a network data connection for the second screen device, such as in cinemas.” Watermarking also lets viewers use both screens whether they’re watching a live broadcast or a DVR recording a week later.
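To make Woodley’s point about synchronization concrete, here is a minimal sketch of the app side, assuming a hypothetical payload that packs a content ID (the licensed “serial number”) together with a running timecode. The 32-bit layout, the `Cue` and `CompanionApp` names, and the cue sheet are illustrative inventions, not part of Intrasonics’ SDK or any real API.

```python
from __future__ import annotations

from bisect import bisect_right
from dataclasses import dataclass


# Hypothetical payload layout, for illustration only:
# high 16 bits = content ID, low 16 bits = seconds into the program.
def unpack_payload(payload: int) -> tuple[int, int]:
    """Split a decoded watermark payload into (content_id, seconds)."""
    return payload >> 16, payload & 0xFFFF


@dataclass(frozen=True)
class Cue:
    at_seconds: int  # program time at which this cue becomes active
    action: str      # what the companion app shows, e.g. a quiz question


class CompanionApp:
    """Maps decoded payloads to cues bundled with the app, so no network is needed."""

    def __init__(self, cue_sheets: dict[int, list[Cue]]):
        # Sort each program's cues by time so they can be binary-searched.
        self.cue_sheets = {
            cid: sorted(cues, key=lambda c: c.at_seconds)
            for cid, cues in cue_sheets.items()
        }

    def on_watermark(self, payload: int) -> str | None:
        """Return the action of the latest cue at or before the decoded timecode."""
        content_id, seconds = unpack_payload(payload)
        cues = self.cue_sheets.get(content_id)
        if not cues:
            return None  # a code this app was not built to recognize
        times = [c.at_seconds for c in cues]
        i = bisect_right(times, seconds)  # number of cues starting at or before `seconds`
        return cues[i - 1].action if i else None


if __name__ == "__main__":
    app = CompanionApp({
        0x0042: [Cue(0, "Welcome screen"), Cue(95, "Quiz question 1"), Cue(210, "Quiz question 2")],
    })
    print(app.on_watermark((0x0042 << 16) | 120))  # → Quiz question 1
```

Because the timecode travels inside the soundtrack itself, the same lookup gives the same result during a live broadcast or from a DVR recording a week later, and nothing has to be fetched once the cue sheet ships with the app. A fingerprinting app, by contrast, typically has to consult a reference database to work out what it is hearing.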

So how has it worked so far? The case studies from Intrasonics alone, not counting those of its competitors, are impressive and already quite broad in terms of genre and style. The design question of how exactly two (or more) screens will interact reemerges here, and browsing through finished productions indicates that most audio watermarks are used in television programs to interact with smartphones and tablets. Perhaps the most obvious fit is nonfiction programming: quiz shows, reality programming, and the like. Hence Intrasonics’ clients include the BBC’s Antiques Roadshow, the Belgian show Fastest Quiz In the World, the Canadian game show Instant Cash, and the Spanish talk show Ant Hill. The technology has also moved into more narrative programming, though, like Channel 4’s comedy series Facejacker (a fiction-reality mix), where comedic bonus material is unlocked on a viewer’s device as each episode progresses. The advertising potential is obvious, too: Ben & Jerry’s has used the technology to run free ice cream quizzes in cinemas before films start.

Indie filmmakers, of course, don’t usually broadcast their work on national television or strike deals with Ben & Jerry’s, but Woodley points to directions in which audio watermarks are already taking the second screen for smaller-scale producers: “We see applications with radio station companion apps, apps that interact with YouTube channels, and apps that reward people at music festivals for checking into band sessions. With feature film and documentary film, we are only just scratching the surface of what is possible, but a simple example might be in the area of product placement and apps that are triggered by watermark codes to offer DVD and Netflix viewers discounts and direct purchases over mobile of certain products that appear in a movie. Another might be in the delivery of deeper multimedia content to accompany a documentary, but great care must be taken not to confuse the viewer about which screen you want them looking at.”

This last point was raised by Jason Brush in his SXSW conversation with Scott Macaulay, cited above. Second screens often feel like they’re pulling viewers away from “the big thing, the thing on the TV” (Woodley says that when a viewer is enmeshed in Twitter, the television might as well be a radio). Michel Reilhac countered that the hierarchy between screens may only be one of size, not necessarily of importance: the second, smaller screen “actually becomes more resourceful and challenging because of its interactivity. Thinking of fiction and focusing on the more interactive second screen, as opposed to the bigger, primary screen, is a very interesting challenge.”

Echo modulation, audio watermarks, and audio fingerprints are all tools that can now help filmmakers do just that.