Extra Curricular

by Holly Willis

Teaching Immersion and Stereoscopy

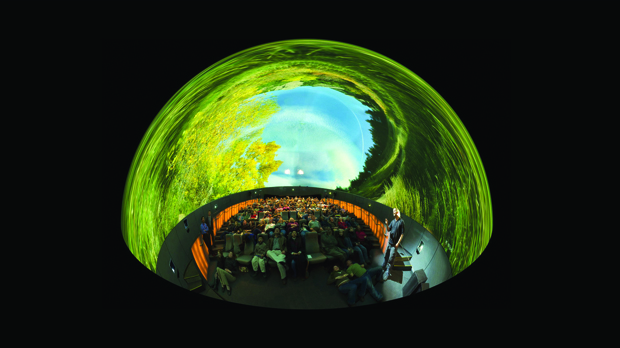

Eric Hanson's class at the Vortex Dome

The profusion of virtual reality projects showcased at the 2015 Sundance Film Festival shows that the tools and techniques of cinematic storytelling are expanding. Film schools are adapting, often by quickly creating new courses that help students navigate this new frontier. My colleague Eric Hanson, for example, now teaches a course at the University of Southern California’s School of Cinematic Arts called “Experiments in Immersive Design.” The course was originally designed to help students understand the history, theory and practice of three-dimensional filmmaking, but under Hanson it has shifted to reflect his own background and interests. A professor in the school’s animation division, Hanson is a visual effects artist with expertise in lighting, rendering and compositing. He’s also a partner, with Greg Downing, in xRez Studio, a small shop in Santa Monica, Calif., dedicated to exploring ultra-high-resolution imaging techniques. The pair’s credits include effects work on The Fifth Element, The Chronicles of Narnia and Spider-Man 3.

Hanson begins his class with a two-week introduction to stereoscopy and then divides the rest of the semester into three sections: the first explores the IMAX format, the second investigates full-dome filmmaking and the third is devoted to virtual reality. Hanson’s approach is hybrid: students consider the kinds of experiences and stories that might work best for each format, as well as the histories, tools and workflows that suit it. Hanson says the class works better now because the tools for creating this kind of work are far more accessible, and the workflows, while still somewhat daunting, are becoming more manageable.

In the course’s two-week introduction, students learn the nuts and bolts of stereoscopy, including terms that are unfamiliar to most film students, such as “interocular distance” (the distance between a viewer’s eyes) and “zero parallax plane” (the depth at which the left- and right-eye images coincide, so that objects appear to sit on the surface of the screen). While the focus on such technical ideas may seem off-putting, Hanson’s approach is playful, inventive and decidedly DIY. Students are encouraged to explore, hack together tools and workflows, and have fun.
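The relationship between these two terms can be made concrete with the standard geometry for a parallel stereo camera pair whose images are shifted horizontally so that objects at a chosen convergence distance fall on the zero parallax plane. The Python sketch below is an illustration under stated assumptions, not material from Hanson’s course: the function names, the 65 mm average interocular figure and the sample lens, sensor and screen values are all hypothetical. Note that the separation between the two cameras (often called the interaxial distance) is a separate setting from the viewer’s interocular distance.

```python
# Hypothetical sketch: screen parallax for a parallel stereo camera pair
# whose images are shifted horizontally so that objects at the chosen
# convergence distance land on the zero parallax plane (the screen).

# Assumed average adult interocular distance, in millimetres.
INTEROCULAR_MM = 65.0


def screen_parallax_mm(depth_m: float,
                       convergence_m: float,
                       interaxial_mm: float,
                       focal_mm: float,
                       sensor_width_mm: float,
                       screen_width_mm: float) -> float:
    """Horizontal parallax on the screen, in millimetres.

    > 0: the object appears behind the screen
    = 0: the object sits on the zero parallax plane
    < 0: the object appears in front of the screen
    """
    # Sensor disparity for a shifted-sensor parallel rig; depths are
    # converted from metres to millimetres so all units agree.
    sensor_disparity_mm = focal_mm * interaxial_mm * (
        1.0 / (convergence_m * 1000.0) - 1.0 / (depth_m * 1000.0)
    )
    # Projection enlarges the sensor image to the full screen width.
    return sensor_disparity_mm * (screen_width_mm / sensor_width_mm)


def forces_divergence(parallax_mm: float) -> bool:
    """True if background parallax exceeds the assumed eye spacing."""
    return parallax_mm > INTEROCULAR_MM


if __name__ == "__main__":
    # Same assumed shot (35 mm lens, 65 mm interaxial, converged at 5 m,
    # 36 mm sensor), distant background, shown on two screen widths.
    for screen_mm in (2_000.0, 10_000.0):
        p = screen_parallax_mm(float("inf"), 5.0, 65.0, 35.0, 36.0, screen_mm)
        print(f"{screen_mm / 1000:.0f} m screen: {p:.1f} mm, "
              f"diverges={forces_divergence(p)}")
```

With these assumed numbers, the distant background lands about 25 mm apart on a 2-meter-wide screen but about 126 mm apart on a 10-meter-wide screen, wider than the viewer’s eyes. That magnification is one reason stereo footage has to be planned differently for giant screens such as IMAX.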

For the IMAX section of the class, students investigate the differences between traditional cinema screens and giant screens; they look at the tools appropriate for giant-screen work and at how cinematography for a conventional theater differs from composing images for a giant screen.

In the full-dome section of the class, students study the AlloSphere at the California NanoSystems Institute at the University of California, Santa Barbara. The AlloSphere is a 30-foot sphere inside a three-story echo-free cube, used to create many kinds of immersive experiences, from a walk through the inside of a brain to large-scale data visualizations. What is the language of cinema for a space like the AlloSphere? How would you tell a story within it? What kinds of stories or experiences might be most powerful?

The students also visit Ed Lantz’s Vortex Dome in downtown Los Angeles. Lantz is the CEO of Vortex Immersion Media, Inc., which is based at L.A. Center Studios, and he is actively exploring the cinematic potential of full-dome projection. Hanson devotes time to the principles of immersive cinema and, again, to the kinds of stories the full-dome experience might make possible.

For the final section of the class, devoted to virtual reality, students experiment with methods for creating immersive cinema, whether with the new GoPro rig, a mirror ball or a fisheye lens.

One technique for this kind of high-resolution filmmaking is photogrammetry, the science of making measurements from photographs. “For the class,” explains Hanson, “this means using DSLR cameras to take a series of still images that are correlated to make a mesh, which we then use to create a reconstruction.”

To understand what Hanson is describing, imagine that you want to capture a beautiful ravine in Red Rock Canyon. You would walk along the ravine taking photographs at multiple heights (low, at eye level and from above, with the camera attached to a pole). After taking 500 or so images, you would import them and create mapped points using the images’ geographic information system (GIS) data; those points could then be reconstructed into a high-resolution model of the terrain. You could then “drape” a photograph over that terrain or layer animated imagery over it, filling the ravine with rippling water, for example, or turning the landscape into a dimensional backdrop for a story.
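At the heart of the correlation step Hanson describes is triangulation: once the same feature has been found in two photographs taken from known positions, its position in space follows from how far it shifts between them. The Python sketch below shows that step for the simplest case, a rectified (row-aligned) pair of images with a known focal length and camera separation. The class and function names and the sample numbers are hypothetical. Real photogrammetry software matches thousands of features across many photographs and must also solve for where each camera was, typically with bundle adjustment, which this sketch does not attempt.

```python
from dataclasses import dataclass


@dataclass
class RectifiedPair:
    """Hypothetical calibration for two rectified (row-aligned) photos."""
    focal_px: float    # focal length in pixels, same for both photos
    baseline_m: float  # distance between the two camera positions
    cx: float          # principal point, x (pixels)
    cy: float          # principal point, y (pixels)


def triangulate(pair: RectifiedPair, x_left: float, y_left: float,
                x_right: float) -> tuple[float, float, float]:
    """Recover (X, Y, Z) in metres, in the left camera's frame, for one
    feature matched in both photos. Rectification puts the match on the
    same image row, so only the horizontal shift (disparity) matters."""
    disparity = x_left - x_right
    if disparity <= 0:
        raise ValueError("a point in front of both cameras needs positive disparity")
    z = pair.focal_px * pair.baseline_m / disparity  # depth shrinks as disparity grows
    x = (x_left - pair.cx) * z / pair.focal_px
    y = (y_left - pair.cy) * z / pair.focal_px
    return (x, y, z)


if __name__ == "__main__":
    pair = RectifiedPair(focal_px=1000.0, baseline_m=0.5, cx=960.0, cy=540.0)
    # Hypothetical matched features: (x_left, y_left, x_right) in pixels.
    matches = [(1060.0, 540.0, 1010.0), (900.0, 600.0, 875.0)]
    cloud = [triangulate(pair, *m) for m in matches]
    print(cloud)
```

Repeating this for every matched feature yields a point cloud; in a full pipeline those points would then be connected into the mesh that Hanson calls the reconstruction.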

xRez Studio tested this process on a massive scale in 2008 with the Yosemite Extreme Panoramic Imaging Project. Ten thousand DSLR images were shot and then, using GIS data, stitched together into a giant panorama that incorporated digital elevation information (captured by shooting the images at multiple heights). “The result is a landscape photograph devoid of perspective: a first in landscape photography,” Hanson says.

One of Hanson’s most recent projects was Black Lake, the 10-minute music video for Björk directed by Andrew Thomas Huang. Hanson says he and his team flew to Iceland to capture a landscape formed by volcanoes, using photogrammetry with DSLRs, a MoVI stabilizer and scanners borrowed from Autodesk to build a terrain map that forms the foundation for the fragmented landscape of Björk’s body. Hanson also recently documented the June 2014 collaboration between Chinese dissident artist Ai Weiwei and Native American artist Bert Benally. The result is Pull of the Moon, a short film that uses aerial mapping achieved with drones, photogrammetry and gigapixel panoramic processes, and that was designed specifically for a 50-foot dome theater in Santa Fe, New Mexico. Since then, xRez has created VR versions for viewing with the Oculus Rift.

Hanson is contagiously enthusiastic about his work and emphasizes how much easier these techniques are becoming. “This is the beginning of the great digitization of the world!” he says. “With my cellphone, I can now walk around a person, take a series of photographs, and using Autodesk’s 123D Catch, create a model of that person in less than five minutes.” He concludes, “It’s now very trivial to do this kind of thing.” Because the tools are more accessible and the process more comprehensible to filmmakers attuned to traditional cinematography, Hanson’s students get to experiment and, together with faculty, explore the emerging visual and sensory languages of immersive storytelling.