Technology Tailwinds — Where Are They Taking Us?

The SOLO Cinebot

The following article was originally published in Filmmaker’s Winter 2021 edition.

Digital technologies, incessantly lurching forward, are the ground we filmmakers stand on. No wonder we’re often unsteady on our feet.

The pandemic has only become an accelerant. Although FaceTime and Skype were around awhile before both became verbs, it took the exigencies of the pandemic to flood our lives with Zoom calling, a digital convenience that has reshaped our relationship to proximity, travel and geography. Just ask Joe Biden. Doesn’t every production meeting now take place on Zoom?

Production practices with renewed COVID relevance include the use of zooms instead of primes to cut down on lens changes, wireless focusing to maintain social distancing, wireless booming instead of touching the talent to attach lavalier mics, wireless camera monitoring using multiple iPads to avoid huddling around a single monitor, and even directing from a faraway location while using an iPad to keep tabs.

Indeed, if you’re hip to what “building a large LED volume” means, then you already know why you need a VAD (virtual art department). Either that, or you’ve watched every episode of The Mandalorian on Disney+ and have consumed all the digital ink about how its fantastical background sets were virtually designed and displayed on enormous LED screens, often slightly out-of-focus. Can enormous LED screens substitute for green screens or rear-screen projection in low-budget filmmaking? Despite WYSIWYG simplicity, these ironically seem to ratchet up complexity, requiring device profiling and system calibration (color-matching camera/lens choices to screen color and refresh rate), real-time camera and lens tracking, genlock for multiple cameras, workflows for on-set color management and more. This is supposed to save time and money? (For an emphatic “yes,” check out “Shooting on Location without Flying There” in Jon Fauer’s Film and Digital Times.)

So, given that we’re now accustomed to remote camera control in the form of PTZ heads (pan, tilt, zoom) and drones, why not remove the camera operator from the set altogether, one less source of infection? That’s the idea behind SOLO Cinebot, a shipping box that you receive, stand on end, then pop the top to reveal a ready-to-shoot, remote-control camera and LED ring light inside. Or why not a terrestrial version of a drone on four fat wheels, which can barrel over the roughest terrain or gently crab across an interior set with Steadicam-like results? Impressive action videos of the robotic AGITO Modular Dolly System can be found on YouTube, as can video demonstrating the aptly named SOLO Cinebot.

Drones are flying robots, and robotics have been seeping into production for some time. A good example of tech borrowed from micro-robotics is the in-body image stabilization (IBIS) featured in recent mirrorless cameras. To compensate for shake in a hand-held camera, the camera moves the entire sensor to counter the displacement of the image on the sensor due to shakiness. If done fast enough, the resulting video appears stable, as if from a Steadicam. Some camera sensors can correct for all five axes of stabilization—X-axis, Y-axis, roll, pitch, yaw—while others combine sensor stabilization with lenses that bring built-in optical stabilization. Whichever the case, whether further augmented by gimbal stabilization or not, the result can sometimes give the familiar Steadicam rig with operator a run for its money at a scintilla of the cost.
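To give a feel for the distances involved, here is a minimal sketch of the geometry, not any manufacturer's algorithm. It assumes a distant subject and a simple lens model in which a pitch or yaw wobble slides the image across the sensor by roughly the focal length times the tangent of the wobble angle. The function name and the half-degree example are hypothetical.

```python
import math


def sensor_shift_mm(focal_length_mm: float, shake_deg: float) -> float:
    """Estimate how far the image slides across the sensor for a given wobble.

    Assumption: distant subject, so displacement ~= focal_length * tan(angle).
    The sensor has to travel the same distance to keep the image in place.
    """
    return focal_length_mm * math.tan(math.radians(shake_deg))


# Hypothetical example: a half-degree pitch wobble at three focal lengths.
for focal_length in (24, 50, 200):
    shift = sensor_shift_mm(focal_length, 0.5)
    print(f"{focal_length} mm lens: sensor shift of about {shift:.2f} mm")
```

Under these assumptions the required travel grows with focal length, which suggests why long lenses tend to lean on optical stabilization in the lens as well as on the sensor.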

A current example of machine learning—AKA artificial intelligence or AI—is found in the advanced autofocusing in Sony Alpha mirrorless cameras. Based on phase-detection—in which a sensor instantaneously compares the output of photodiode pairs to establish a precise focus—Sony’s advanced algorithms simultaneously track the eyes, the shape of the head, the body, even patterns found in clothing. These inputs are combined to form a subject profile—human or animal—and the result is a smart autofocus that won’t jump from the subject to an object passing between the subject and the camera, because it knows not to. It will even stay focused on a face when it turns to profile, or when the back of a head is seen.
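The comparison at the heart of phase detection can be sketched in a few lines. This is a toy illustration only, not Sony's method: it assumes each half of the photodiode pairs sees the same one-dimensional brightness profile, displaced sideways when the lens is out of focus, and it searches for the displacement that lines the two profiles up. The function name and sample data are made up for the example.

```python
def best_shift(left, right, max_shift=5):
    """Return the integer shift of `right` that best matches `left`.

    A result of 0 means the two profiles already coincide (in focus, in this
    toy model); the sign says which way the profiles are displaced.
    """
    best, best_error = 0, float("inf")
    n = len(left)
    for shift in range(-max_shift, max_shift + 1):
        # Compare only the samples that overlap at this trial shift.
        pairs = [(left[i], right[i + shift]) for i in range(n) if 0 <= i + shift < n]
        error = sum(abs(a - b) for a, b in pairs) / len(pairs)
        if error < best_error:
            best, best_error = shift, error
    return best


# Hypothetical brightness profile across a row of photodiode pairs.
profile = [0, 0, 0, 1, 4, 9, 4, 1, 0, 0, 0, 0]
displaced = profile[-2:] + profile[:-2]  # same profile moved two samples right

print(best_shift(profile, profile))    # no displacement
print(best_shift(profile, displaced))  # displacement of two samples
```

In a real camera the measured displacement would then be converted into a lens movement, and the tracking described above decides which part of the frame to measure.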

Don’t misunderstand: focus-pulling is an art, and good focus-pullers are artists. This is why smart autofocus poses no threat to their livelihoods. (Indeed, I’ve repeatedly asked several major video camera manufacturers to consider making available a read-out of their camera’s phase-detection distance data in order to assist manual focus-pulling. So far, no luck.) Are there not an infinite number of documentary situations in which smart autofocus would be a godsend, especially in our peak moment of large sensors, shallow focus and one-person crews?

Even small sensors benefit from a fleet, nimble autofocus capability. No more cutting-edge example exists than Apple’s addition of LiDAR to the iPhone. Phase-detection autofocus debuted in iPhone 6—iPhone sensors are made by Sony—but augmented reality (AR) requires the spatial smarts of LiDAR, which Apple just introduced in its latest iPhone 12 Pro, Pro Max and iPad Pro. What is LiDAR? Mash together the words light and radar and you’ll have a general idea. Most LiDAR systems feature a spinning device like a radar dish that sweeps an array of invisible laser beams around and around. The lasers bounce off objects in the environment, and the LiDAR system measures how long it takes for the light beams to return. In this way, LiDAR builds and continually refreshes a 360º wireframe map of nearby objects, somewhat like a drawing of a 3-D elevation but in the round, captured in real time.
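The timing principle described above reduces to simple arithmetic: light travels out and back, so the distance is half the round-trip time multiplied by the speed of light. The sketch below shows only that general principle, not Apple's implementation, and the three-metre example is an arbitrary choice.

```python
SPEED_OF_LIGHT_M_PER_S = 299_792_458  # exact by definition


def distance_from_round_trip(seconds: float) -> float:
    """Distance to an object, given a laser pulse's round-trip time."""
    # The pulse covers the distance twice (out and back), so halve the path.
    return SPEED_OF_LIGHT_M_PER_S * seconds / 2


def round_trip_time(distance_m: float) -> float:
    """Round-trip time for a pulse reflected at the given distance."""
    return 2 * distance_m / SPEED_OF_LIGHT_M_PER_S


# Arbitrary example: an object three metres from the sensor.
elapsed = round_trip_time(3.0)
print(f"round trip: {elapsed * 1e9:.1f} ns")
print(f"recovered distance: {distance_from_round_trip(elapsed):.2f} m")
```

The round trip at room scale takes only tens of nanoseconds, which hints at how fast the timing electronics have to be.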

For what it’s worth, bulky, obscenely expensive LiDAR systems like the one pictured here sit atop all prototype driverless vehicles (with the exception of Tesla)—one of the obstacles to the adoption of such vehicles. Apple instead uses a state-of-the-art, miniaturized, solid-state LiDAR with no moving parts. Located in the iPhone’s camera bump, it scans what’s in front of the iPhone up to fifteen feet away, many times the maximum range of the infrared lasers in the existing Face ID TrueDepth camera. (Solid-state LiDARs like this will eventually be featured in cars, too.)

What can be done with real-time 3-D mapping of the immediate environment? As it happens, Apple has already incorporated LiDAR data into its autofocus algorithms, and there’s no reason to think this approach won’t appear in other cameras and devices soon. Existing, expensive focus-tracking systems using infrared, laser or ultrasound (Preston Light Ranger 2, Wards Sniper MK 3, ARRI Ultrasonic Distance Measure) might someday be eclipsed by inexpensive, commoditized LiDAR focusing kits from the likes of Amazon, with finger-tracking control of focus using your iPad. Why not?

As to those who dismiss autofocus as strictly for amateurs: I’m a professional DP. On occasion I use autofocus, therefore my use of autofocus is professional. Case closed.

The topic of what’s new in cameras themselves, where they’re heading, is too broad to tackle here, but there are two extant trends worth noting: camera bodies are shrinking and sensors are expanding. Examples of ultra-compact digital cinema cameras with full-frame sensors include the Sony FX6, Canon EOS C500 Mark II, and RED DSMC2 with 8K Monstro sensor. Small bodies, of course, are ideal for drones and gimbals; the smaller the body, the more this holds true.

It’s worth remembering that the first camera to capture HD video with a full-frame sensor was a DSLR, the Canon EOS 5D Mark II, introduced a dozen years ago.

The descendants of DSLRs, the latest full-frame mirrorless cameras, are marvels of miniaturization and enhanced capabilities. Eliminating the internal mirror box reduced size, weight and complexity. The shorter flange-focal distance simplified lens design, resulting in sharper images from smaller, cheaper lenses. And while Sony pioneered the mirrorless full-frame camera, Canon, Nikon and Panasonic now boast second-generation mirrorless lines.

Their compactness, however, is also their Achilles’ heel. Canon’s EOS R5 internally records DCI 8K RAW up to 30fps, or 4:2:2 10-bit 4K up to 120fps—sort of amazing, but there’s a price to pay. The internal torrent of data needed to pull off this feat causes the R5 to overheat often and shut down. The petite chassis of a mirrorless camera, it turns out, makes an inefficient heat sink compared to an Alexa or Venice, nor is there much room for a cooling system. One mirrorless camera that has overcome this obstacle is Sony’s a7S III, designed primarily for video. With no limit on recording time, it is widely reported not to overheat despite lacking an internal fan. Internally it records 4:2:2 10-bit 4K up to 120fps and outputs 16-bit RAW from a full-size HDMI port. By the time you read this, Sony will likely have announced a new mirrorless camera akin to the a7S III that, like the Canon EOS R5, records 8K up to 30fps.

Do we really need 8K capture? It seems excessive, given that most movie theaters still show Hollywood blockbusters in 2K (a mere 1080 pixels in height, like HD), yet no one complains about inadequate screen sharpness. But as those who routinely shoot in 4K have discovered, you can’t be too rich, too thin, or have too many pixels. With 4K or 8K original images, you can crop, reframe, stabilize, and zoom in, as you like, in post, without visibly sacrificing screen detail or amplifying noise. Cameras no longer need OLPFs (optical low-pass filters) to suppress moiré. Moreover, with vastly more pixels (8K has 16 times more pixels than 2K), any video noise shrinks proportionately in size.

Best of all, even when the finished result is viewed in HD, the detail-rich original image shines through, conveying an impression of finer texture and subtlety. This is one motivation behind the recent introduction by Blackmagic Design of its URSA Mini Pro 12K camera. (Early adopters seem ecstatic with the results from this camera.) Similarly, a number of newer cameras also feature sensors with more than 4K pixels, whether or not they can actually record video larger than 4K, as can the URSA Mini Pro 12K with its choice of 4K, 8K and 12K. What these newer cameras share is the baseline function of recording a 10-bit 4K image derived from a larger oversampled image. For instance, while Sony’s FX9 and Panasonic’s S1H both incorporate full-frame 6K sensors, the FX9 can record only 4K from its oversampled 6K image, while the S1H can record either a 4K version or the full 6K version. (As of this writing, only two mirrorless cameras have been approved by Netflix: Panasonic’s S1H and Canon’s EOS C70, which is 4K Super 35.)

Here again, there’s a price to pay. An uncompressed 8K image with 16 times more pixels would require 16 times more media capacity to capture and store, a connector and pipeline with 16 times more throughput, a wireless signal with 16 times more bandwidth, and an editing/grading workstation with enough brawn to handle the whole megillah. This means CPUs and GPUs with more cores, or advanced RISC systems-on-a-chip like Apple’s new M1. Even when using the latest, most efficient codecs to compress and transport these oceans of data, only the latest model computers and buses and the largest, fastest SSDs and RAIDs will prove practical. (Which will engender additional budget line items.) So keep an eye out for discussions of the workflows and solutions arrived at by those intrepid trailblazers shooting with 6K cameras or the URSA Mini Pro 12K. They should prove enlightening.
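The sixteen-fold figure is easy to check with back-of-the-envelope arithmetic. The sketch below is illustrative only: the frame sizes are assumed DCI container dimensions, and the 10-bit, three-channel, 24fps settings are assumptions chosen for the example rather than figures from any particular camera.

```python
# Assumed frame sizes (DCI container dimensions), chosen for illustration.
FORMATS = {
    "2K": (2048, 1080),
    "4K": (4096, 2160),
    "8K": (8192, 4320),
}


def pixel_count(name: str) -> int:
    """Total pixels per frame for a named format."""
    width, height = FORMATS[name]
    return width * height


def uncompressed_gbit_per_s(name, bits_per_channel=10, channels=3, fps=24):
    """Uncompressed video data rate in gigabits per second (assumed settings)."""
    bits_per_frame = pixel_count(name) * channels * bits_per_channel
    return bits_per_frame * fps / 1e9


for name in FORMATS:
    ratio = pixel_count(name) / pixel_count("2K")
    print(f"{name}: {pixel_count(name):,} pixels, {ratio:.0f}x 2K, "
          f"{uncompressed_gbit_per_s(name):.2f} Gbit/s uncompressed")
```

Because every downstream stage scales with the pixel count, the same factor of 16 carries through to storage, pipeline throughput and wireless bandwidth before any compression is applied.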

While we’re on the intimidating subject of 8K and 12K, will new lenses be needed? Hardly. True, the advent of 4K caused film and video lens manufacturers to up their game, but consider this: while a full-frame 4K sensor might contain, at minimum, about ten megapixels, it’s typical for full-frame mirrorless cameras used by still photographers to feature four to six times as many pixels. Where resolution is concerned, to them we’re pikers. The good news is that not only are traditional film and broadcast lens manufacturers (Cooke, ARRI, Zeiss, Angénieux, Canon, Fujinon) producing their best-ever, most innovative lenses (and most expensive), but so are relative newcomers like Sony, perhaps the leader in servo-driven (as opposed to mechanical) lens design. Other cine stand-outs include Tokina, whose Vista primes are considered optically superb for their price point, Sigma, Tamron, and Samyang. Arguably the most intriguing development in the lens market is the entry of a wave of Chinese manufacturers, offering a host of conventional and unconventional designs, all “affordable.” These include Laowa (Venus Optics), TTArtisan, 7Artisans, Viltrox, Mitakon Zhongyi, Meike and several others. Keep an open mind about these; you might be surprised.

This is a good point at which to underscore the fact that camera and display resolutions are no longer inextricably linked. Just because 8K flat panels were all the rage at the recent virtual CES show doesn’t mean that you need to shoot 8K to produce programming for them. The point of an 8K camera is to capture an excess of pixels, the better to crop and push them around. The point of an 8K display, however, is different. Remember Apple’s original marketing of their Retina Display concept? The idea was to not see any pixels, even up close. Well, that’s what an 8K TV is. Super 8, 16mm, 35mm, HD, 4K, especially 8K will all look terrific on an 8K TV as long as they’re first mastered to HD, the lingua franca of virtually all TV broadcasting. For this reason, 8K TVs are all equipped with very respectable upresing. It’s what they do best and most often, since very little original programming exists in 8K.

As explained above, a supersampled image is a superior image: an 8K or 4K camera will produce a striking-looking HD deliverable. Again, this underscores the fact that no essential or fixed link exists between camera and display resolutions. A similar relationship pertains to camera and display when it comes to tonal scale reproduction. Back in the day, a color negative would compress the tonal scale and motion picture print film would stretch it back out. Likewise, using today’s digital cinema cameras, we capture and record 14-16 stops of dynamic range by means of log gammas or recording to RAW files, then grade the resulting footage in Rec. 709. When we do this, we clip a good deal of shadow and highlight detail, since a Rec. 709 display can only display five stops of dynamic range.
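Each stop is a doubling of light, so a dynamic range quoted in stops converts to a contrast ratio as a power of two. The short sketch below simply runs the stop counts mentioned above through that conversion; the function names are made up for the example.

```python
import math


def contrast_ratio(stops: float) -> float:
    """Contrast ratio spanned by a given number of stops (one stop = 2x light)."""
    return 2.0 ** stops


def stops_from_ratio(ratio: float) -> float:
    """Inverse conversion: number of stops covered by a contrast ratio."""
    return math.log2(ratio)


# Stop counts taken from the paragraph above.
for stops in (5, 14, 16):
    print(f"{stops} stops is a contrast ratio of about {contrast_ratio(stops):,.0f}:1")
```

Seen this way, the gap between what the camera records and what the display shows is a matter of orders of magnitude, which is why grading decisions about shadows and highlights matter so much.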

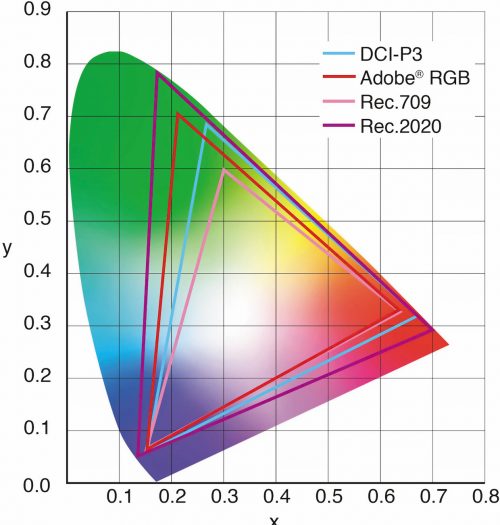

represents the total color gamut seen by the human eye. Courtesy Eizo

As a TV display standard, Rec. 709 specifies a set of possible colors, called a gamut, which in the case of Rec. 709 is based on the original gamut of the NTSC standard of 1953. Things have changed a bit since then, so a replacement for Rec. 709, called Rec. 2020, for 4K and 8K TV, was introduced in 2012. Rec. 2020 defines a significantly larger gamut, one that can reproduce colors inaccessible to NTSC, like the red of a Coca-Cola can. In the diagram, you can see that Rec. 2020 comes closer to reproducing all the colors that the human eye can see. (It still falls considerably short.) In turn, the practical problem that Rec. 2020 creates for us is that only one display technology comes close to reproducing full Rec. 2020, and that’s a true RGB laser projector (not the lesser “RGB phosphor” type). RGB laser projection also offers superior brightness and longevity, which is why, pre-pandemic, it was on the brink of wider adoption in movie theaters. Instead, the somewhat smaller color gamut standard seen in today’s digital theaters is called DCI-P3. Theater projectors are required to meet the P3 standard so that DCPs appear uniform from theater to theater. As a smaller, more attainable gamut, it also serves as the practical standard for most of those enormous flat panels at this year’s CES, whether 83” OLEDs, 88” MicroLEDs or 85” QLED MiniLEDs. Typically their specs boast something like “99% of P3.” Notably, in the past several years, most of Apple’s iMacs and MacBook Pros have also adopted P3.

In terms of High Dynamic Range (HDR), let’s simply say that regardless of HLG or Dolby Vision (which Apple adopted in the iPhone 12), all displays, whether TVs or monitors or iPads, are trending toward greater maximum brightness, which any semblance of HDR will eventually require. OLEDs are handicapped in this regard, with a tendency towards burn-in. There are other extant technologies that sandwich a second all-white LED panel behind the color LCD screen in order to selectively juice the brighter areas of the image (which can elevate power draw and heat). Many expect the ultimate winner to be a self-emissive technology called microLED, presently used in large video walls and TVs over 100”, assembled from square modules. At every NAB since 2017, for instance, Sony has showcased an 8K microLED cinema screen 32 feet in length called CLED (Crystal LED). Ask anyone who saw it. It was stunning.

Compared to OLED, the gold standard for deep blacks, microLED uses three to four times less power, achieves a wider color gamut, a greater peak brightness, the same perfect blacks, and because it is based on inorganic semiconductors, is impervious to burn-in. It also boasts a longer lifetime. What’s not to like? The challenge is to miniaturize microLED and improve manufacturing yield and costs. Sony, Apple and everyone else are working feverishly to accomplish this ASAP. Meanwhile, large, inexpensive, conventional 4K and even 8K LCD TVs proliferate, with China poised to dominate this end of the market within a year or two. Might prove a boon to your virtual art department!

Another tectonic shift that is affecting everyone is virtualization, the conversion to IT (information technology) of nearly all postproduction services and broadcast studio gear, with the exception of control panels and displays. Exhibit A is DaVinci Resolve 17, which is nothing less than a full postproduction studio on the desktop (media management, editing, online collaboration, VFX, grading, color management, audio mixing, finishing, output), currently in extensive use around the world. (95% of Resolve is available as a free download from Blackmagic Design.) Exhibit B, which demonstrates the sheer reach of digital manipulation, is CineMatch, a plug-in for Premiere, Resolve and (soon) FCPX that compares colorimetric profiles of camera sensors so that, with a single click, you can match log files from disparate cameras after a multi-camera shoot. (So much for the distinctive “look” of each camera.) Exhibit C is SMPTE 2110, a new suite of international digital standards for sending synchronized audio/video/data media over an IP network. Adiós SDI cables! Hello off-the-shelf computer peripherals and drives!

In this brief overview I’ve skipped developments in immersive media, namely AR, VR and 3D projection, in favor of good, old-fashioned 2D movie viewing. Yet after 125 years of collective 2D viewing, we must accept a fait accompli: the ubiquity of compact, even tiny, screens. I recently re-watched Chaplin’s City Lights on a small screen, and the famous final shot didn’t register emotionally as it always has. Classic cinema drew upon scale, long taken for granted, as a source of expressive power. Future filmmakers may no longer be able to count on this elemental resource.

It’s also uncertain that future filmmakers will ever know a silver screen. As anyone paying attention knows, movie theaters, independent and chain, seem caught in a death spiral. Under early pandemic pressure, Universal abbreviated its theatrical window, then in August a federal judge vacated the antitrust Paramount Consent Decrees, which since 1948 had barred studios from owning and dominating theater chains. Could the timing of this decision possibly be more anticlimactic? As the pandemic shuttered theaters worldwide, Regal filed Chapter 11 and AT&T’s WarnerMedia eliminated theatrical windows for its entire 2021 slate, opting for simultaneous theatrical and HBO Max premieres. Enter Netflix and the rest. We still don’t know how this pans out in the end, but watching films in the future will probably not resemble watching films in the past, the way joining film in a hot splicer with film cement bears scant relationship to an editing keystroke.

Regarding the latest technology choices to “future-proof” your work, a source of considerable anxiety for some: no one in the distant future will ever complain that Grey Gardens is boxy and grainy. No one will care whether your film originated in HD or 4K. Making work that is compelling and thereby worth preserving is the best way to increase the odds that someone in the future will endeavor to preserve it. As several successful features shot on iPhones demonstrate, technology doesn’t make good films, good filmmakers do.