Progress in Small Steps: Camera and Lighting Technology Trends in 2023

Filming Gareth Edwards' The Creator in Thailand with Sony FX3. ©Disney

Commercial theatrical projection for most folks is an afterthought. A DCP gets loaded into a playout server, sound levels checked, curtains adjusted, and everything is good to go. This testifies to the efficacy of the two-decade-old DCP (Digital Cinema Package) container format.

However, DCP adoption was not always smooth sailing. In 2009, a necessary DCP revamp complicated the industry’s transition away from 35mm. The original “Interop” specification, which had only been provisional, was superseded by a set of standardized specifications from the Society of Motion Picture and Television Engineers (SMPTE). Interop supported only one frame rate, 24 fps, but the new SMPTE DCP standard added frame rates of 25 and 30 for both 2K and 4K DCPs, as well as 48, 50, and 60 for 2K DCPs. (Note: in authoring SMPTE DCPs, 23.98 and 29.97 fps are always rounded up to 24 and 30.)

Unfortunately, eight years of expensive Interop DCP servers had been sold before the new SMPTE standards arrived, and these remained in service years afterwards. They could not play the new SMPTE DCPs or the new frame rates. By design, however, the newer SMPTE DCP servers could play both Interop DCPs and SMPTE DCPs. This confusing situation—two types of DCP servers in use at the same time—fed a common belief that, where film festivals were concerned, to be on the safe side, DCPs should always be Interop. Which, as a consequence, meant films must be 24 fps.

For North American documentaries filmed at 29.97 fps, this was tough cookies. It meant frame-rate conversions to 24 fps at post houses—costly, and the source of unsightly motion judder. For documentaries shot in Europe and around the world at 25 fps, everything played 4% slower when shown from a 24 fps Interop DCP.
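The 4% figure is simple arithmetic, and a short sketch makes it concrete. This assumes the simplest case, in which every frame is kept and only the playback rate changes. The function name and the 90-minute runtime are illustrative, not from any DCP tool.

```python
def playback_at(native_fps: float, playback_fps: float, runtime_min: float):
    """Return (speed change in percent, new runtime in minutes) when every
    frame is kept but the material is played at a different frame rate."""
    speed_change = (playback_fps / native_fps - 1.0) * 100.0
    new_runtime = runtime_min * native_fps / playback_fps
    return speed_change, new_runtime


# A hypothetical 90-minute documentary shot at 25 fps, played at 24 fps.
change, runtime = playback_at(25, 24, 90)
print(f"speed change: {change:.1f}%, runtime: {runtime:.2f} min")
```

A 29.97 fps source is a different problem: reaching 24 fps there means discarding or blending frames rather than slowing playback, which is where the judder comes from, and this sketch does not model it.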

Film festivals began transitioning to DCPs starting around 2013, i.e., well after 2009. SMPTE DCP servers by that time were in wide use. From the outset, it’s unlikely that frame rates of 25 and 30 presented any technical issues at most festivals, at least on an individual film basis. (Projectors are happy to project any frame rate.) So why the continued fixation on Interop?

Some think cinema should only ever be 24 fps, but that’s an article of faith. Peruse the internet for advice to indie filmmakers on making DCPs for festivals, and you’ll be told in no uncertain terms to go Interop. Recently I encountered this very advice in person during a grading session at a Brooklyn post house. I might have replied that virtually all North American DCP servers are SMPTE, ditto Western Europe, with Latin America and Asia not far behind. Or that SMPTE DCPs not only provide frame-rate choice but also enhance audio choices and facilitate multiple-language subtitles including closed captions. They also support HDR and immersive audio like Dolby Atmos. I might have added that, over the years, I’ve sent various SMPTE DCPs at 30 fps to small European festivals and experienced zero problems.

Out of professional tact, I let it slide. But the good news is that in 2023, despite residual confusion and outdated internet advice, the real world seems to be rallying around SMPTE DCPs. More than one exhibitor and a festival projection manager told me this year they now warn people away from the deprecated Interop standard. The Berlinale recommends SMPTE over Interop. Sundance says that “DCPs may be Interop or SMPTE at 24, 25, 30 fps.” Progress at a snail’s pace, but I’ll take it.

2023 was also a tipping point for 4K DCPs. Most theatrical digital projectors since the advent of digital cinema have been 2K, functionally equivalent to HD with 1080 vertical pixels. While this remains the case, 4K projector adoption is gaining.

So why make a 4K DCP when most projection remains 2K? One answer is that it doesn’t cost a penny more to make a 4K DCP than a 2K, assuming your source material is 4K. Another answer is that even if a theatrical projector is only 2K, its DCP server will automatically scale down a 4K DCP to 2K—DCP’s codec, JPEG 2000, is scalable, one reason it was chosen for DCPs—which will look great on the big screen. So, why aren’t all DCPs 4K, at least for future-proofing? Indeed, as of 2023, 4K has suddenly become the new norm. Most if not all DCPs shown at the 2023 New York Film Festival in October were 4K. Recently speaking to a Sony Pictures Classics executive, I heard the same thing: all of their latest DCPs are now 4K. Netflix requires 4K DCPs.

If your DCP is 2K, though, no worries! Most of the digital cinema you’ve seen for 20 years has been 2K, and it’s always looked terrific. Resolution isn’t everything, anyway—ask any experienced director of photography. For 4K projection, a DCP server simply scales up a 2K DCP. It will look the same, only with finer onscreen pixels. In fact, the projection room may thank you, since it takes somewhat less time to load a 2K DCP on their servers than a 4K, and they can fit maybe twice as many 2Ks on the same RAID.

There’s more welcome news on the theatrical exhibition front: Adoption of RGB laser projection is rising. Partly because, while RGB laser projectors are expensive up front, they are more efficient in the long run—no costly xenon bulbs to maintain and replace—and partly because superior RGB laser projection harmonizes with current efforts to upsell movie-going as a premium experience: to wit, Dolby Atmos, reclining plush seats, food and drink service. To a viewer, RGB laser projection is no brighter—DCI standard screen brightness must be 14 foot-Lamberts (+/- 3 fL)—but colors do appear richer, with noticeably more snap. This is because, to maximize color gamut size, an additive color system must minimize the width of each of its light primaries on the electromagnetic spectrum. The red, green and blue diode lasers of an RGB laser projector, each being single wavelength, do this to within a nanometer. No current digital video monitor comes close to the full color gamut that RGB lasers produce, which alone achieves HDR’s Rec. 2020 gamut.
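One rough way to see how much wider Rec. 2020 is than the familiar HD gamut is to compare the triangles their primaries form on the CIE 1931 xy chromaticity diagram. The sketch below uses the published Rec. 709 and Rec. 2020 primary coordinates. Treat the result as an illustration only, since area in xy space is not perceptually uniform.

```python
def triangle_area(primaries):
    """Area of the triangle spanned by three (x, y) chromaticity points
    (shoelace formula)."""
    (x1, y1), (x2, y2), (x3, y3) = primaries
    return abs(x1 * (y2 - y3) + x2 * (y3 - y1) + x3 * (y1 - y2)) / 2.0


# CIE 1931 xy coordinates of the red, green and blue primaries.
REC_709 = [(0.640, 0.330), (0.300, 0.600), (0.150, 0.060)]
REC_2020 = [(0.708, 0.292), (0.170, 0.797), (0.131, 0.046)]

area_709 = triangle_area(REC_709)
area_2020 = triangle_area(REC_2020)
ratio = area_2020 / area_709
print(f"Rec. 709: {area_709:.4f}  Rec. 2020: {area_2020:.4f}  ratio: {ratio:.2f}x")
```

The Rec. 2020 primaries sit on the outer edge of the diagram, where only single-wavelength light lands. Broader-spectrum primaries fall inside that edge and shrink the triangle.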

A couple of months before 2023’s New York Film Festival began, projectors in Lincoln Center’s Walter Reade Theater and Alice Tully Hall were upgraded to 4K RGB laser. Next time you’re in either of these theaters and the big red Netflix “N” logo appears onscreen, pay special attention to the rainbow of hues that expands to fill the screen as the N bursts into vertical stripes. The colors will appear remarkably deep and rich.

(By the way, if you weren’t convinced by the flawless, breathtaking 3D of last year’s Avatar: The Way of Water, do yourself a favor and see Wim Wenders’ upcoming Anselm, a documentary in 3D about the work and spirit of provocative German artist Anselm Kiefer. It’s a work of genius about genius, a tour de force of cinema and Wenders at his most profound. The superb 3D of both films is due to Dolby 3D, a collaboration between Dolby and RGB laser projector manufacturer Christie. It’s worth highlighting that only RGB laser projectors possess the output to make 3D appear as bright and colorful as conventional 2D, or for that matter to project 2D HDR on the big screen, which in the future we’re destined to see a lot.)

Monitors

Magic wand in hand, the mastering monitor I would conjure for my Resolve Workstation is the just-introduced Flanders Scientific XMP550 QD-OLED, the best-looking SDR and HDR monitor I saw in 2023. What’s not to envy? A state-of-the-art Quantum Dot OLED panel, 55” diagonal, UHD resolution, 2,000 nits peak brightness, 4,000,000:1 contrast, a wider QD-OLED color gamut and what Flanders calls Auto Calibration. Plug in a probe and the monitor does the rest, no computer or app needed. If only I had $20,000 burning a hole in my pocket!

It’s no state secret that Samsung Display makes all large QD-OLED panels on the market, including this one used by Flanders and Sony in its high-end consumer Bravia line. (The 2023 Nobel Prize in Chemistry was awarded to three U.S.-based scientists “for the discovery and synthesis of quantum dots,” the nanocrystals that emit the pure red and green monochromatic light in Samsung’s QD-OLED displays.) In time, with luck, affordable QD-OLED mastering monitors for grading SDR and HDR could arrive from other manufacturers too, capitalizing on QD-OLED’s huge color gamut (90% of Rec. 2020). OLEDs, it would seem, are very much on top now. Inexpensive consumer OLED TVs, for instance, are currently used by Hollywood studios as client displays. However, all OLED displays, including QD-OLED, have an Achilles heel: burn-in.

That’s why Apple has endeavored for years to develop MicroLED displays for its products, an effort that is ongoing. Totally impervious to burn-in, MicroLED panels are super-thin, power-efficient, and self-emissive like OLED. Each HDR-bright MicroLED pixel is its own light source, which means that MicroLED panels produce true blacks when these pixels switch off, exactly like OLEDs do. If this sounds like MicroLED is the holy grail of displays, well, that’s what Apple thinks too. The only hitch is, MicroLEDs, despite their name, are exceedingly hard to manufacture at the micro scale needed for an Apple Watch. This is because MicroLED happens to be the display technology behind those enormous video billboards that bring 24-hour daylight to Times Square, Shibuya Crossing, and the Vegas Strip. However, at ProArt’s NAB booth in April 2023 I saw a relatively small but breathtakingly spectacular 135” MicroLED HDR display with 2,000 nits of brightness. It actually stopped me in my tracks. So did its $200,000 price tag.

You may have noticed colorists on the west coast using Apple iPads for field playback of color grades. iPads and iPhones have earned a reputation for uniform color accuracy and consistency over time. Wouldn’t these ubiquitous devices make terrific lightweight location video monitors, better than any camera’s built-in LCD finder? That’s what a Chinese company called Accsoon thought, and they have introduced a new product called SeeMo. It’s a small adapter run on a Sony L-series–type battery that takes the HDMI output of your camera and converts it to a proprietary codec that SeeMo then feeds via Lightning connector or USB-C to your iPhone or iPad. A SeeMo app on your Apple device displays Accsoon’s codec, turning your iOS device into a Ninja or Shogun equivalent, with colored peaking, focus enlargement, false color, zebras, waveforms, histograms and 8-bit H.264 recording. I’ve used a SeeMo since March 2023 on my secondary interview camera, and it’s been a marvel, breathing new purpose into the still-gorgeous OLED display of my old iPhone X. I couldn’t buy another onboard monitor as slim or as sophisticated if I wanted to. Last time I checked, SeeMo’s street price was less than $150.

Cameras and Lenses

As long as we’re on the topic of Apple products: The new Apple iPhone 15 Pro, like the 13 Pro and 14 Pro before it, supports internal recording of 4K ProRes in both SDR and HDR. The newsflash is that the 15 Pro introduces a new Apple Log profile for extended tonal scale capture. It also introduces recording of video to external storage, such as SSDs, via its new USB-C connection, which replaces Apple’s customary Lightning Connector.

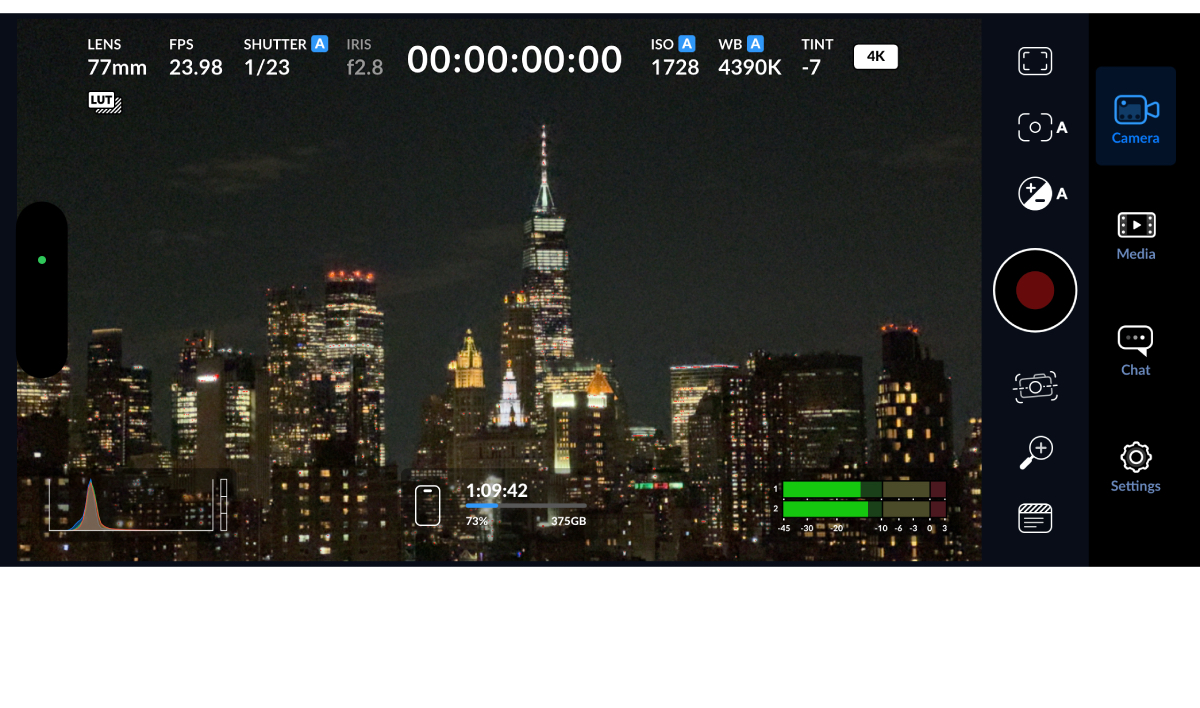

If your goal is to shoot 4K video with your iPhone 15 Pro—popular with young filmmakers and vloggers—you’ll find few useful controls in the settings of Apple’s camera app. Filmmakers for some time have instead relied on the excellent Filmic Pro app to transform their iPhone Pros into functional video cameras. I paid $4.99 for Filmic Pro back in 2014, but in 2022 Filmic Pro switched to a subscription model of $49.99/year. Enter Blackmagic Design, which last September at IBC in Amsterdam introduced a free iOS app called Blackmagic Camera that turns your iPhone 15 into a… Blackmagic camera! Yes, with a complete menu of pro settings in that simplified menu layout characteristic of actual Blackmagic Design cameras.

Shooting 4K on my 15 Pro using the Blackmagic Camera app, I have a choice of H.264, H.265 and all four ProRes flavors, from 422 Proxy to 422 HQ. (I’m going with ProRes HQ!) For frame rates, I have a choice of 23.98 or 24, 29.97 or 30, all the way up to 60, or I can shoot 1080p at 120 fps or 240 fps. Using Apple Log with a viewing LUT, I can scroll from 32 ISO up to 2500, adjust shutter angles and much more. Further, DaVinci Resolve 18.6 provides support for Apple Log in both Resolve Color Management and ACES, a first for smartphone video.

Now, no one with experience asserts that an iPhone is equal to, say, an Alexa 35 with Master Primes. An iPhone Pro’s three wee lenses and scaled-down sensors are impressive miracles of miniaturization, but it takes every trick in the book, including the sorcery of computational photography, to produce so much apparent quality, and there are limits. Cinematic mode, for instance, uses dynamic depth mapping to generate depth-of-field effects, but Cinematic mode isn’t available while shooting ProRes. Neither are slo-mo and time-lapse. Even an industry-leading 3-nanometer A17 Pro chip has its processing limits.

In October 2013, Sony introduced its first full-frame mirrorless cameras for still photography, which sparked a revolution that is still unfolding. Until then, SLRs and motion picture cameras relied on optical reflex viewfinders that incorporated a mirror box assembly or a spinning 45-degree mirror shutter, respectively. These mechanisms took up considerable space between the rear of the lens and the focal plane, a distance called “flange focal depth.” The flange focal depth, for instance, of a Canon EF mount lens is 44 mm and of an ARRI PL mount, 52 mm (about two inches). But what if you wanted to mount a 20 mm lens, one that at infinity focus would place the optical center of the lens 20 mm from the focal plane? Wouldn’t the rear of the lens protrude into the mirror? This called for optical trickery, something called a “retrofocus” lens design—a lens that could be mounted a lot farther from the focal plane than its focal length indicated.

Needless to say, a retrofocus design adds considerable complexity and cost to lens design and manufacture, including additional glass elements. But what if the mirror box or spinning mirror shutter no longer existed because of solid-state image sensors and their electronic viewfinders? This, then, is what Sony brought to full frame in 2013: a “mirrorless” camera featuring its E-mount, with a short flange focal depth of merely 18 mm. Arriving since then, oh so gradually, has been a series of mirrorless mounts with similarly short flange focal depths: Canon RF, Nikon Z, Leica L, Fuji X, Micro Four Thirds, etc.
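The flange-depth reasoning can be sketched in a few lines. The mount depths are the ones quoted above. The test itself is a deliberate simplification that treats a lens as a single optical center sitting one focal length from the focal plane at infinity focus. Real lenses are thick, multi-element designs, so take the cutoffs as indicative only. The function name and the list of focal lengths are illustrative.

```python
# Flange focal depths in millimeters, as quoted in the article.
FLANGE_MM = {"ARRI PL": 52.0, "Canon EF": 44.0, "Sony E": 18.0}


def needs_retrofocus(focal_length_mm: float, flange_mm: float) -> bool:
    """Simplified test: if the focal length is shorter than the flange depth,
    a simple design would have to sit behind the mount, so a retrofocus
    design is needed."""
    return focal_length_mm < flange_mm


focal_lengths = (14, 20, 24, 35, 50, 85)
for mount, flange in FLANGE_MM.items():
    affected = [f for f in focal_lengths if needs_retrofocus(f, flange)]
    print(f"{mount} ({flange:.0f} mm): retrofocus needed for {affected} mm")
```

Under this simplification, the 18 mm mount needs retrofocus only for the 14 mm lens, while the reflex-era mounts need it for every focal length up to 35 mm (EF) or 50 mm (PL).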

The resulting simplification in lens design has contributed to an outpouring of inexpensive but relatively impressive lenses, some with novel focal lengths and capabilities, mostly from China, which I’ve mentioned previously in this magazine. In late 2023, venerable Cooke Optics inaugurated its latest product category, compact cine lenses for full-frame mirrorless cameras. Cooke touts its new SP3 set of T2.4 prime lenses—25, 32, 50, 75, 100 mm—as based on its legendary Speed Panchro design from the 1920s. The full set lists for $21,375 at B&H and is back-ordered. For context, this is less than two-thirds the cost of a single full-frame Cooke S8/i prime.

By the way, the “i” in Cooke S8/i stands for Cooke’s /i Technology, a trailblazing open metadata protocol Cooke developed and integrated into its lenses several decades ago. Lenses with Cooke /i communicate lens data by means of electrical contacts on a PL mount. Lately, lens metadata, camera metadata and positional tracking, all on a per-frame basis, have become indispensable to real-time CG pre-visualization, in-camera compositing inside LED volumes and near-real-time (NRT) compositing on exterior film sets. In early 2023, Cooke Optics reached across the English Channel to partner with French company EZtrack, a manufacturer of camera tracking and data aggregation tools. The result is an NRT system that ingests metadata from cameras and Cooke /i lenses for each take, then merges and renders CG elements so that director and crew can play back a rough composite almost immediately, blurring boundaries between production and post.

Later in 2023, rival lens maker ZEISS acquired U.K. firm Ncam Technologies, maker of a camera- and lens-tracking system that interfaces with ZEISS’s own smart lens system, eXtended Data (“XD”), as well as Cooke /i and ARRI’s LDS. Rechristened CinCraft Scenario, the ZEISS approach is uniquely hybrid. It can utilize conventional reflective dots placed nearby to track camera position, but it can also rely solely on fixed features in the physical environment, which it scans to form a 3D depth map of its surroundings. Both the Cooke-EZtrack and ZEISS-Ncam collaborations point to a growing role for camera and lens metadata in working with virtual sets.

Early in November, Sony announced a new mirrorless camera optimized for sports, the A9 III. It deserves mention here because it is the world’s first full-frame camera with a global shutter. You may remember that Sony’s F55 from a decade ago featured a Super 35 global shutter. Blackmagic URSA and URSA Mini 4K S35 cameras once had global shutters, too, as did Canon’s S35 C700 GS PL Cinema Camera. All of these cameras are discontinued. Today, there are two 6K cine cameras on the market with S35 global-shutter sensors, RED’s Komodo and Z CAM’s E2-S6G. That’s about it.

Why do we desire global shutters? A global-shutter CMOS sensor exposes all pixels in a frame simultaneously. This means every part of each frame is exposed in the same instant, as is the case with motion picture film cameras. Sounds simple enough, but a global-shutter CMOS sensor must also read out all pixels before exposing each new frame. That’s quite a trick, one that often requires an intermediate storage step. The knock against global-shutter sensors is that, as a result, they are a stop or two slower, deliver a stop or two less dynamic range and produce more noise.

This is why most digital cameras opt for a simpler “rolling shutter” that exposes and reads out pixels sequentially, one line at a time, from top to bottom of each frame. This top-to-bottom sequence means that what is recorded by the top row takes place a split second earlier than what is recorded by the bottom row. If a camera is whip-panned or if a moving object speeds across the frame, the outcome is a skewing of vertical image detail, however momentarily. Thankfully, today’s rolling shutter sensors have high-speed readouts and are outstanding performers—that is, until you fast pan and your images bend a little, or you film a flash photography scene and the camera flashes look weirdly bisected upon playback.
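To get a feel for the size of the skew, here is a back-of-the-envelope sketch. It assumes a constant-speed pan and a simple linear mapping from pan angle to pixels. All the numbers (readout time, pan speed, field of view) are hypothetical, not measurements of any camera.

```python
def skew_pixels(readout_ms: float, pan_deg_per_s: float,
                hfov_deg: float, width_px: int) -> float:
    """Horizontal offset between the top and bottom rows of one frame.

    The image slides across the sensor at roughly
    (pan speed / horizontal field of view) * frame width pixels per second,
    and the bottom row is captured readout_ms later than the top row.
    """
    px_per_s = pan_deg_per_s / hfov_deg * width_px
    return px_per_s * readout_ms / 1000.0


# Hypothetical rolling-shutter sensor: 16 ms top-to-bottom readout,
# 90 degrees/s whip pan, 45-degree horizontal field of view, UHD width.
skew = skew_pixels(readout_ms=16.0, pan_deg_per_s=90.0, hfov_deg=45.0, width_px=3840)
print(f"rolling shutter skew: {skew:.1f} px")

# A global shutter exposes every row at once, so the row-to-row delay is zero.
print(f"global shutter skew: {skew_pixels(0.0, 90.0, 45.0, 3840):.1f} px")
```

Halving the readout time halves the skew, which is why faster readouts make rolling shutters look better without ever eliminating the effect.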

To put an end, once and for all, to skewed images from full-frame sensors, the solution is obvious. Unfortunately, the larger the sensor and the more pixels it contains, the harder it is to engineer a global-shutter design that would be competitive, given today’s high expectations of wide dynamic range and no noise. The F55’s S35 CMOS sensor, for instance, contained a mere 8.9 million pixels compared to the A9 III’s full-frame 24.6 million pixels. Hats off to Sony for tackling this challenge with its A9 III. What sports photographer who shoots fast-action stills for a living wouldn’t want a full-frame camera free of image skew? Especially if that camera captures full-frame RAW stills in bursts of 120 fps! (Cartier-Bresson, eat your heart out.)

As a 4K video camera, the A9 III is equally impressive. It downsamples 4K from its native 6K at up to 120 fps with no crop. Internally, it records 10-bit 4:2:2, and externally, it outputs 16-bit RAW via a full-size HDMI port. As do other Sony pro video cameras, it provides S-Log3 (ISO 2000) and S-Cinetone (ISO 320).

While it’s true that the readout from a Venice 2’s full-frame 8.6K rolling shutter sensor is lightning fast and virtually eliminates skewing, the little A9 III, which costs 10 times less than Venice 2, still comes out ahead. Why does this matter? A global shutter benefits motion tracking and compositing and VFX in general, where any trace of rolling shutter artifacts can be problematic. A global shutter might also prove advantageous in working with LED walls and volumes with varied refresh rates.

A rumor of another Sony pro video camera coming soon is making the rounds, possibly something in the FX family. You may recall that Sony repackaged the current a7S III, adding a cooling system and inserting it into its Cinema Line as the popular FX3. It’s no stretch to imagine Sony creating a Cinema Line version of the A9 III. Sony is the world’s largest sensor manufacturer and, I would argue, the most advanced. Developing new sensors is always expensive and time-consuming, and Sony, like all manufacturers, targets all its markets to amortize these costs. This is why camera products at both price extremes can contain the same Sony sensor technology, from iPhones to the Venice 2. It’s why essentially the same Sony sensors find their way into a range of Sony cameras (and cameras by other manufacturers) of different shapes, sizes, functionality and use grades, from consumer to professional, still and motion picture.

Indeed, this fall, Time magazine named Sony’s FX3 as one of its “Best Inventions of 2023.” (Little matter that the FX3 was introduced in spring 2021.) As reason for the accolade, Time cited the fact that British director Gareth Edwards (Rogue One: A Star Wars Story) had filmed The Creator, his $80 million sci-fi epic about war between humans and AI, on FX3s. Tagged by The Guardian as “one of the finest original science-fiction films of recent years,” The Creator has been playing in theaters since September.

The FX3’s diminutive size, coupled with lightweight Ronin gimbal stabilizers, enabled Edwards to do most of his own operating on location in Thailand. But, said DP Oren Soffer in an interview, what “unlocked the camera for us” in terms of premium image quality needed for an effects-laden film was the FX3’s ability to output 4K ProRes RAW to an Atomos Ninja V+, bypassing Sony’s internal encoding and XAVC compression. Soffer added, “This footage could intercut with, or be completely interchangeable with, Alexa, and nobody would really notice.”

In September, Sony did announce a new $25,000 cine camera, a mini-Venice called “BURANO” (an island in the Venetian lagoon), which will arrive in spring 2024. For less than half the cost of an 8K Venice 2 body ($58,000), you get the same 8.6K full-frame sensor, dual base ISO of 800 and 3200, and 16 stops of latitude. BURANO fills a yawning product gap between the high-end Venice 2 and Sony’s 6K full-frame FX9 ($10,000).

Actually, another compact full-frame 8K camera at the same price point already filled this gap two years ago; however, it wasn’t a Sony. RED’s innovative V-RAPTOR 8K Vista Vision features a locking RF lens mount (Canon’s answer to Sony’s mirrorless E-mount, mentioned above) and, in a first for RED, a full-frame sensor incorporating phase-detection autofocus pixels (PDAF) to enable focus tracking of faces, eye autofocus, etc., when RF autofocus lenses are used. PL-mount lenses can be attached, too, using an optional RF-PL adapter. The adapter includes a built-in electronic 7-stop ND filter and pass-through contacts for Cooke /i and ZEISS XD lens data. A battery V-mount occupies the camera’s rear.

BURANO matches each of these features; then, it takes them to the next level. BURANO’s PL mount comes already attached, providing contacts for Cooke /i and ZEISS XD lens data. Remove six screws, and underneath is a locking E-mount with gold pins for lens communication. This means that any E-mount lens with autofocus and optical image stabilization will offer these capabilities when attached to BURANO. BURANO’s tour de force, though, is IBIS, or in-body image stabilization, another feature carried over from mirrorless cameras. IBIS brings image stabilization to any attached lens, E-mount or PL. Using an internal gyroscopic sensor, IBIS counteracts shifts in yaw, pitch and roll by dynamically moving the sensor, a technique known as 3-axis stabilization. When an optically stabilized E-mount lens is attached, contributing two more vectors of active stabilization, BURANO provides 5-axis stabilization. BURANO is the first digital cinema camera to offer 5-axis stabilization, not counting Sony’s FX3 and its S35 sibling, the FX30.

Another welcome innovation in BURANO is a variable electronic ND filter that can continuously adjust from two to seven stops, making possible an auto-ND function. Auto-ND is handy when stepping from bright outdoors to dim indoors because, unlike auto-iris, it doesn’t incur a big shift in depth-of-field. For the record, no camera has ever before combined IBIS and an electronic ND system. This represents both a feat of physical design and stepped-up camera computational power.
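The stop arithmetic behind a variable ND is easy to sketch: each stop halves the light, so two to seven stops spans transmissions from 1/4 down to 1/128. The auto-ND function below is a hypothetical illustration of the idea of holding exposure with the filter instead of the iris. It is not Sony's algorithm, and the names and light ratios are assumptions.

```python
import math

# Continuous ND range quoted in the article, in stops.
ND_MIN_STOPS, ND_MAX_STOPS = 2.0, 7.0


def transmission(stops: float) -> float:
    """Fraction of light passed by an ND of the given strength."""
    return 0.5 ** stops


def auto_nd(current_stops: float, light_ratio: float) -> float:
    """ND setting that holds exposure when scene light changes by
    light_ratio (new level / old level), clamped to the filter's range."""
    wanted = current_stops + math.log2(light_ratio)
    return min(ND_MAX_STOPS, max(ND_MIN_STOPS, wanted))


print(transmission(2), transmission(7))

# Walking indoors: scene light falls to 1/16 (four stops), so ND drops from 6 to 2.
print(auto_nd(6.0, 1 / 16))

# Light quadruples outdoors: 8 stops would be needed, but the filter tops out at 7.
print(auto_nd(6.0, 4.0))
```

When the wanted value falls outside the two-to-seven-stop range, as in the last case, something else (iris, shutter or ISO) has to absorb the remainder.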

BURANO isn’t perfect. Its “viewfinder” is one of those awkward viewing tubes attached to an LCD that Sony prefers on its lower-tier cameras. I find the optional attached arm and grip flimsy. Of Venice’s three X-OCN quality levels (OCN, or original camera negative, is Sony’s oblique, misleading name for 16-bit compressed RAW), only the “lite” version, X-OCN LT, is available for internal 8K recording in BURANO. Alternatively, for 8K, BURANO internally records 10-bit XAVC H, up to 1200 Mbps. When in S35 mode, BURANO captures 5.8K. Shooting at 120 fps drops the scan to 4K.

BURANO is about two-thirds the size and weight of the Venice. I’m guessing Sony has taken on board the lesson of the Alexa Mini, introduced in 2015 as a smaller, lighter, stripped-down version of Alexa, a specialty camera for tight shooting situations that ended up eating Alexa’s lunch in indie filmmaking and pretty much anywhere that shoulder rigs, gimbals and Easyrigs were popular. I notice that when Sony promotes the upcoming BURANO, it emphasizes that this capable little camera will be for “one-person style” production by “solo shooters” in “solo-operation.”

Lighting

The past decade in lighting tech has seen a shift in momentum from bi-color LED panels (daylight/tungsten) to COB (chip-on-board) lights, those compact instruments with a big yellow dot in the middle that lend themselves to traditional modifiers like barn doors, reflectors and fresnel lenses. Wireless operation via smartphones has also grown in popularity, as many LED units are now RGB and can mimic classic Rosco and Lee filters, not to mention red/blue police lights, flickering TVs, lightning and lazy fires. LED lights in general increasingly come in all shapes and sizes, including fabric rolls and, of course, walls.

What remains a challenge is the pleasing reproduction of skin tones, a consequence of the fact that LED phosphors fail to reproduce the full-color spectrum that humans evolved to see and enjoy, combined with the fact that digital cinema cameras impose their own spectral sensitivities and limitations, proprietary to their manufacturers.

It has become evident that red, green and blue LEDs are not enough to provide the full color gamut necessary to reproduce accurate skin tones. ARRI’s Orbiter and Prolycht’s Orion series, for instance, incorporate RGBACL, a mixture of red, green, blue, amber, cyan and lime LEDs. Aputure, by contrast, uses RGBWW, a mixture of red, green, blue and two white LEDs, basically daylight and tungsten. (In August, Aputure acquired Prolycht. At IBC in September, they jointly announced a future LED mix that would combine the best attributes of RGBWW and RGBACL.)

Where does this leave stages with LED walls or volumes made of RGB panels? These LED walls were expensive to install; they likely haven’t made back their investment… and now they need to be replaced because skin tones don’t look their best? And where does this leave the manufacturers of LED walls, who have to decide exactly which multi-LED technology should be adopted for continuous, non-spikey color? After all, their largest markets are advertising displays and video signage, where current RGB technology already succeeds splendidly and where price competition is rising. Just how many stages with LED surround volumes, with subjects lit from multiple angles by RGB walls, are there in the world, anyway?

Nevertheless, almost all manufacturers of video walls used in virtual production are now developing RGBW tiles, with the addition of at least one white LED for better color rendition. I don’t believe any of these are on the market yet. Meanwhile, DPs working with LED volumes and walls are making the best of it, sometimes complementing the RGB wall lighting falling on their subjects with additional lighting from sources with more complete color spectrums, sometimes not. Of course, there’s always downstream color grading to fall back on, although additional color grading doesn’t do the post budget or schedule any favors.

As if shooting with LED walls needed any further complication, a new lighting category called image-based lighting (IBL) is emerging. IBL is a blurring of lighting and video where a video signal is fed into a special RGBWW panel light or into a spatial arrangement of RGBWW lights. The video signal is not displayed as a sharp image but instead rendered as a fuzzy moving image with a high light output, much brighter than any video display. The idea is to produce dynamically moving lighting on subjects which is motivated by, or coordinated with, the action in a 2D background plate or 3D volume. Because a video image feeds the IBL system, the IBL is usually genlocked to both the camera and the LED wall or volume.

Like all lighting, IBL remains outside the frame. In a video wall setup, with a camera facing a foreground subject against a video wall in the background, IBL can add lighting effects that might seem to come from a second large video wall behind the camera. (Think nighttime poor man’s process shot, with offscreen lights passing to the side and overhead.) At NAB 2023, IBL was demonstrated by Quasar Science, Kino Flo and others, including a beer-budget setup consisting of an Accsoon SeeMo Pro using Prolycht’s iOS Chroma lighting control app to manage up to eight Prolycht lights. A color picker in the Chroma app was used to select up to eight small zones in a video image sent by the SeeMo Pro, and the lights then actively mimicked the color and brightness of whatever video played in those zones.
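The zone-sampling idea in that budget setup can be sketched very simply: average the pixels inside each chosen zone of a video frame and hand that color to the matching light. The code below is a toy illustration with made-up names and a tiny hand-built frame. It does not represent how the Chroma app or any shipping IBL product works.

```python
def zone_average(frame, zone):
    """Mean (r, g, b) of the pixels inside zone = (x0, y0, x1, y1), where
    x1 and y1 are exclusive. frame is a list of rows of (r, g, b) tuples."""
    x0, y0, x1, y1 = zone
    pixels = [frame[y][x] for y in range(y0, y1) for x in range(x0, x1)]
    count = len(pixels)
    return tuple(sum(p[channel] for p in pixels) / count for channel in range(3))


def drive_lights(frame, zones):
    """Map each named light to the average color of its zone in this frame."""
    return {name: zone_average(frame, box) for name, box in zones.items()}


# Toy 4x2 frame: left half red, right half blue.
RED, BLUE = (255, 0, 0), (0, 0, 255)
frame = [[RED, RED, BLUE, BLUE],
         [RED, RED, BLUE, BLUE]]

# Two hypothetical lights, each assigned one half of the image.
zones = {"left_light": (0, 0, 2, 2), "right_light": (2, 0, 4, 2)}
levels = drive_lights(frame, zones)
print(levels)
```

Run once per video frame, a loop like this would make each light follow the changing color and brightness of its zone. A real system would also have to convert the RGB values into drive levels for the light's particular LED mix.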

Artificial Intelligence

AI Will Smith launched 2023 eating spaghetti and, eventually, meatballs, courtesy of ModelScope from DAMO Vision Intelligence Lab. The year concludes with Stability AI’s release of Stable Video Diffusion, a free AI test platform that conjures short videos from stills. While this latest image-to-video synthesis is capable of creating a few seconds at most, the spaghetti… er, writing, is on the wall.

All creative and moral panic aside, most AI or machine learning will be trained not as generative models but instead as tools for deep analysis, taking on nearly impossible predictive tasks in diagnostic medicine, virology, chemistry, agriculture, aerodynamics, meteorology, earth sciences, oceanography, astrophysics, etc. The benefits to humanity will be ceaseless—if we can manage to stick around the planet long enough.

In this optimistic vein, technologist extraordinaire Michael Cioni has quit his job at Adobe and joined his brother, Peter, who quit his job at Netflix, to start Strada, a company determined to channel emerging AI services relevant to media makers into a single cloud platform designed to be easy to navigate. You may remember Michael as the founder and CEO of Light Iron, then as Panavision’s VP behind the Millennium DXL camera; next, global senior VP of innovation at frame.io and, finally, senior director of global innovation at Adobe. He certainly knows what he’s getting himself into. (Cloud-based computational post services for film and video are not new. Check out cinnafilm.com.)

In an interview in The Hollywood Reporter, Cioni said, “We’re starting a new company that is focused on AI technology for workflow.” He sees AI as a useful utility to automate tasks like transcoding and syncing, not as an intruder into creative realms like editing and grading. His goal is not to create the AI tools himself but to vet, shape and aggregate those developed by others, all under the Strada umbrella.

If only someone would just point AI toward dust-busting and scratch removal for film scans!