Digital Motion Picture Cameras in 2017: New Images for the 21st Century

As my seventh annual camera round-up for Filmmaker goes to press and online, NAB 2017 has just wrapped, and one major take-away is clear: the march towards full-on realism — visual sensations so real that images appear palpable — is in its infancy.

[Author’s note: this deep dive into camera tech was written for the Spring 2017 issue of Filmmaker. Despite arriving online at a later date, it remains timely and informative. Stay tuned for my Digital Motion Picture Cameras in 2018 in Filmmaker, coming soon!]

Call it hyper, call it immersive, call it virtual, the fact is that display technology is fast advancing, at least at NAB. Will projectors be replaced by enormous emissive screens? Will the shared audience experience fundamental to theater since the time of Aeschylus survive the solipsism of VR?

Film theorists have long likened the big screen to a window or a mirror, an instrument of voyeurism or simulacrum of a dream. Buster Keaton in 1924’s Sherlock Jr. depicted both possibilities when, as a sleepy film projectionist, he nods off and dreams of himself breaking the silver screen’s fourth wall. His goal is to rescue his sweetheart after he, daydreaming within his own dream, imagines her onscreen, in the clutches of an unscrupulous cad, a dastardly fellow who’d earlier framed Buster for theft and was now putting the moves on Buster’s girl in front of an entire paying audience! (This being a Keaton film, Buster is picked up by his breeches and tossed back out of the projected image, landing smack on his keister. Reentering the screen, he next runs afoul of disjunctive film editing.)

Like Buster’s projectionist, we can now step into the narrative on the other side of the screen by slipping on a pair of VR goggles. We can look around, size up the situation on our own. Unlike Buster, we can’t really “take action” or move around inside the stereoscopic 360-degree videos that rely on these goggles. For one thing, we’re blindfolded like a hooded falcon, our ears insulated by headphones. Moving about would mean crashing and tripping over nearby objects, which could cause authentic pain.

I’m using VR and 360-degree video to frame my discussion of camera trends in 2017 because VR takes cinema’s purveyance of fantasy to its logical extreme where the individual is concerned: a synthetic solo experience as singular and vivid as an acid trip. The dream factory as headgear.

To pull this off, however, the latest Oculus and Vive headsets have a long way to go. They manage slightly more than 1K per eye, resulting in a noticeable “screen door” texture that degrades today’s 360-degree video experience. Since VR headsets maintain a close distance to the eye, their displays probably will need 4K or even 8K per eye to make pixels vanish entirely.
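A back-of-envelope check makes that estimate plausible. The sketch below is illustrative only: it assumes a 100-degree horizontal field of view per eye and treats roughly 60 pixels per degree (one arcminute per pixel, the usual 20/20 acuity figure) as the point where pixels stop being visible. Neither number comes from a headset spec sheet, and the function names are my own.

```python
# Back-of-envelope estimate of headset pixel density.
# ASSUMPTIONS (not from any spec sheet): 100-degree horizontal field of view
# per eye, and ~60 pixels per degree as the threshold where pixels vanish.

def pixels_per_degree(horizontal_pixels, fov_degrees):
    """Average angular pixel density across the field of view."""
    return horizontal_pixels / fov_degrees

def pixels_needed(fov_degrees, target_ppd=60.0):
    """Horizontal pixels per eye required to reach the target density."""
    return round(fov_degrees * target_ppd)

FOV = 100.0  # assumed degrees per eye

print(pixels_per_degree(1080, FOV))  # about 1K per eye -> 10.8 px/degree
print(pixels_needed(FOV))            # 6000 px per eye, between 4K and 8K
```

The average ignores lens distortion, which concentrates or spreads pixels unevenly across the view, so treat the result as an order-of-magnitude figure.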

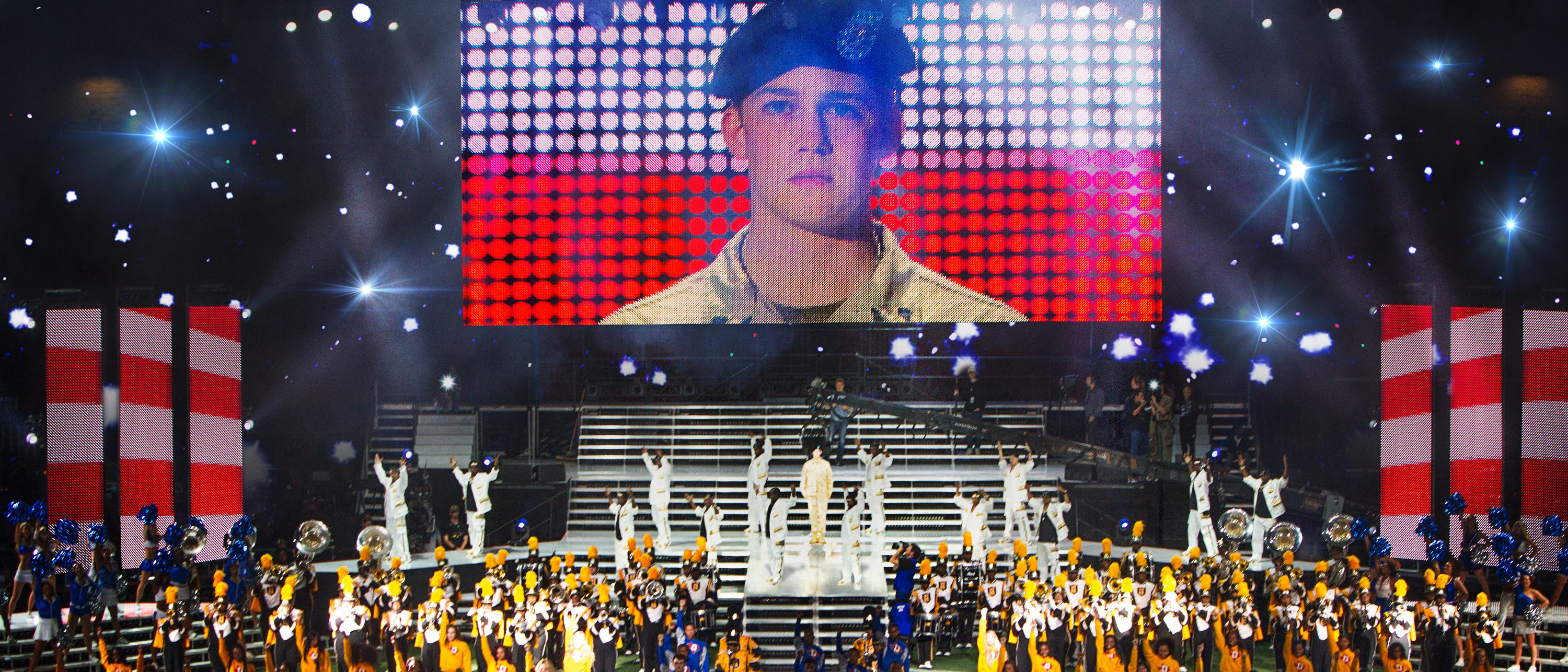

Meanwhile, in the realm of theatrical cinema reaching for hyperrealism, 2016 was the year of Ang Lee’s Billy Lynn’s Long Halftime Walk, the first theatrical feature shot in 3D, 4K, HDR (high dynamic range), HFR (high frame rate of 120 fps), and WCG (wide color gamut), requiring dual state-of-the-art Christie RGB laser projectors to achieve brightness levels twice the norm.

Did it deliver? Depends upon whom you ask. Some scorned it as live TV on steroids. After all, Billy Lynn was shot in accordance with the new Ultra-high-definition television (UHDTV) standard, Rec. 2020, which supersedes the aging Rec. 709 standard for HD. Lee’s 3D rig comprised two Sony F65s.

Others, like me, consider Lee a true cinéaste and Billy Lynn a bold artistic experiment placing today’s best digital camera technology at the service of novel narrative ends. Admittedly, not all experiments succeed, or succeed in their entirety. (Which in science goes without saying; in art, can be hard to acknowledge.) I saw Billy Lynn in a 3D theater several times, projected as intended, and I continue to think that this film represents a cinematic breakthrough of the first order, a rethinking of mise-en-scène in 3D space, with implications for syntax, acting, and lighting.

In any case, Billy Lynn anticipates where the 21st century is taking motion pictures, at least those displayed on two-dimensional screens. What lies in store are exponential increases in spatial resolution, temporal resolution, color depth, brightness, and dynamic range. The end-goal will be flat images so “lifelike,” so finely detailed, so rich in texture and apparent perceptual depth that viewers will forget they’re looking at mere displays. (Take that, VR headsets, with your color fringing and murky resolution!)

Naturally, cameras are involved in this. But before we plunge into the latest camera tech, a cautionary note.

When Steve Jobs demonstrated the first-ever Wi-Fi in a laptop during his 1999 MacWorld keynote in New York (I was there), or introduced his iconic smartphone eight years later, neither he nor his cheering audiences could possibly have imagined that someday their vaunted tools for democratizing cyberspace could be hijacked and twisted to poison the public commons with falsified news and hate speech. This is a textbook example of Robert Merton’s law of unintended consequences.

In the graphic arts, both coarse dabs and finely etched lines have their expressive purposes, and I see no reason for today’s fast-evolving cinema tech to be an exception. We can marvel at NHK’s stunning 8K, now available via satellite — we’ll get to that — just as we can be moved by the sumptuous texture of Kodak’s newly restored Super 8 Ektachrome. (Has their Super 8 camera shipped?)

A century of cinema classics shot on grainy film at 24 fps is all the proof I need that resolution is never enough. Not one of these works would have been improved, even in the slightest, by today’s 4K digital origination. With their flickering, jumpy images — photomechanical patina — they preserve an imprint of the past, lost modes of dress, speech, behavior, outlook and attitude — in perforated amber. Priceless.

This is where the law of unintended consequences might someday seek an opening for mischief, if we’re not vigilant. While advancing the technology of our cameras and displays, we’ll want to avoid throwing out the baby — cinema’s mystery and magic — with the bath water of yesterday’s technology. This is why I roll my eyes with every pronouncement by some new streaming entity that only 4K will be accepted as an origination format. We’ll be the poorer, visually and graphically, if this trend continues.

In my 2015 camera round-up, I cited advances in modularity, materials, ergonomics, wirelessness, camera apps, IP connectivity, sensor windowing, phase-detection autofocus, small sensors, and mirrorlessness in still/video hybrids with shallower lens mounts. In last year’s 2016 camera round-up, I mentioned iPhones, 4K, H.265, 8K UHDTV, tonal & temporal resolution, Rec. 2020, HDR, HFR, and described updates to lineups from Sony, Canon, Panasonic, ARRI, RED, and Blackmagic Design.

These subjects remain topical, so I won’t revisit them here.

What became clear at this year’s NAB is that camera manufacturers have positioned their mainstay camera platforms in the marketplace, and that, for the time being, they intend mainly to enhance these platforms with occasional firmware updates. (RED-style sensor upgrades remain a thing of the future for most of them.) There were no replacements at the Sony booth for the existing F65, F55, F5, FS7, and FS5 line-up. Panasonic unveiled no new production cameras, VariCam or otherwise, at their press conference, nor did Blackmagic Design. Not a single mention of CION was heard at AJA’s press conference or found in their press kit. ARRI didn’t bother to hold a press conference. RED bailed entirely, opting out of NAB and choosing instead to place all its eggs in the basket of June’s Cine Gear Expo in Los Angeles.

This left Canon and Panavision to divvy up the faithful, those like me who attend NAB each year to get a jump on new cameras. Who now must ask the hard question, has NAB lost its mojo?

Tweaks to existing cameras seem increasingly to appear at random. Late last autumn Sony announced an upgraded FS7 Mark II, borrowing Sony’s remarkable Electronic Variable ND filter system from the popular FS5 and introducing a new, fortified locking E-mount. Little more than a month before NAB, with no apparent calendar logic, Blackmagic Design launched a “Pro” version of URSA Mini 4.6K.

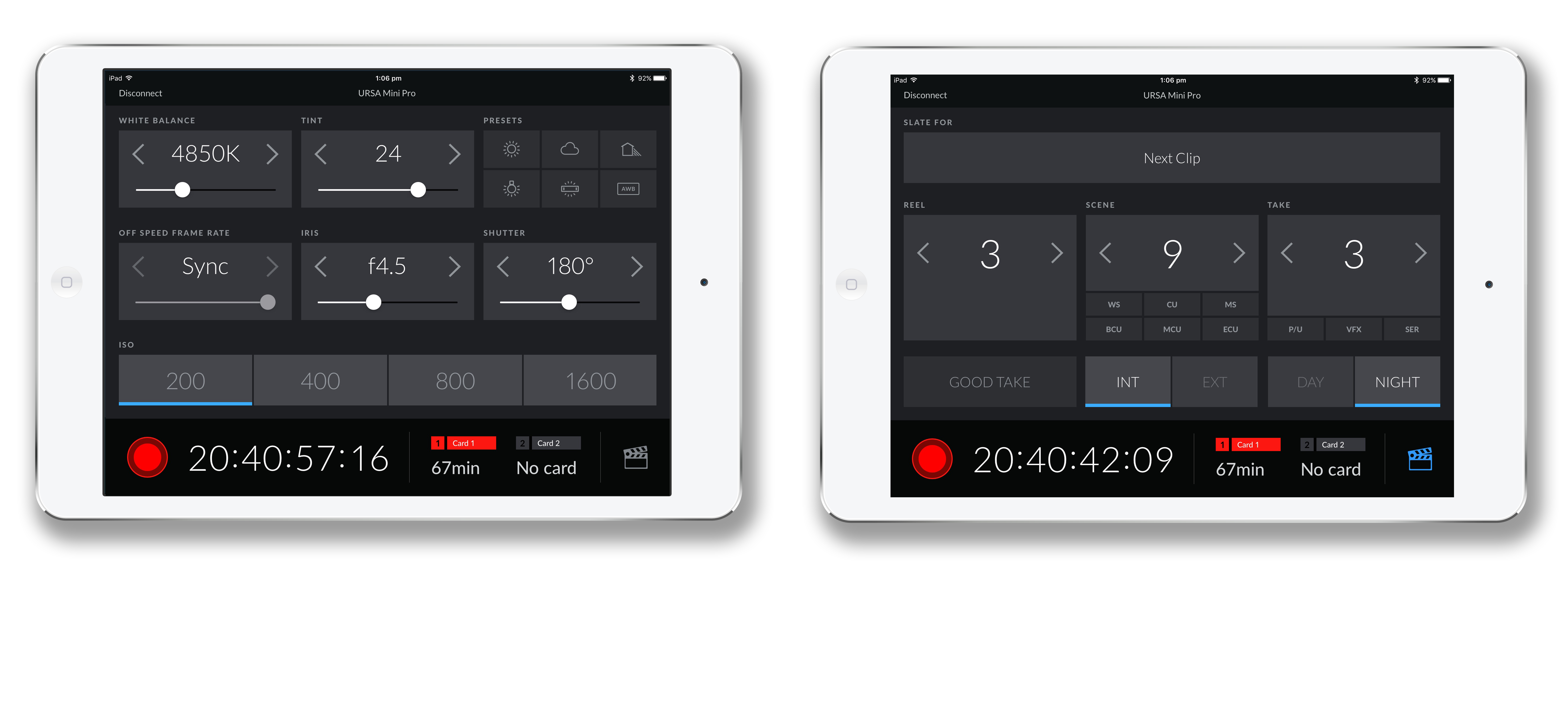

At their NAB press conference, Blackmagic mentioned their shiny new URSA Mini Pro 4.6K only in passing, in the context of a free upcoming firmware update that supports Bluetooth control of functions and metadata via iPad, “a hidden secret feature we didn’t tell anyone about,” per founder Grant Petty. Petty also announced a giveaway of the app’s API and source code so people can “do their own customization, because I think there are all sorts of things we haven’t really thought about.”

For one thing, Blackmagic’s Bluetooth control can access and operate multiple cameras at once. Camera control and iOS app designers, rev your engines!

Notwithstanding, URSA Mini Pro 4.6K is a significant upgrade over the previous URSA Mini 4.6K, while retaining its sensor, performance, and body profile. Obvious upon first glance is the fact that the sparse older design has given way to a full count of ENG-style toggles, switches, buttons and dials, including a backlit digital-cinema-style interface for camera set-up. Eye-catching and transformational in terms of operator interface.

While previously there were two URSA Mini 4.6K models, one with EF mount and the other with PL, now there is but one Mini Pro model with user interchangeable mounts. EF is the supplied mount, but with the proper torque wrench anyone can convert EF to PL (Cooke/i lens data support), Nikon, or B4. With the B4 mount, it’s a cinch to shoot windowed HD because URSA Mini Pro provides a built-in 12-pin Hirose lens-control connector.

More goodies include built-in ND filters with infrared (IR) compensation, a standard rotating ND selection knob, and two sets of card slots for recording to either CFast 2.0 or cheaper SD cards.

NAB was a chance to kick the tires on Canon’s new 4K workhorse, the EOS C700 Cinema Camera, introduced last September in Amsterdam at IBC, on which Canon has pinned its hopes for episodic TV and cinema production.

At $28K for a basic body with EF or PL mount (no viewfinder), the C700 is comparable to Sony’s F55 in price, size, and elongated profile. When Sony introduced the F55 in 2012, it also introduced a substantially cheaper, lower-tiered model, the F5, with an older sensor and no internal 4K recording. Canon takes a different route. From the sensor backward, there is but one version of the C700; while from the sensor forward, there are three combinations, based on selection of one of two lens mounts and two sensors.

You can buy a C700 with either a PL mount with Cooke/i lens data contacts, or Canon’s own “Cinema Lock” EF mount with a rotating clamping collar similar to PL (“positive lock”). You can switch to the other mount as upcoming projects dictate. Unfortunately, this is not a DIY operation. Downtime and round-tripping your C700 to a Canon Service Center will be necessary.

As of this writing, the available sensor is the 4K Dual Pixel CMOS AF (AF = autofocus). Canon says a C700 GS PL model (GS = global shutter, PL = lens mount) will arrive in July. This 4K global shutter sensor is not Dual Pixel AF. It cancels the autofocus advantage of EF lenses, hence a PL version only. A GS PL body will set you back $30K, $2K more than the Dual Pixel CMOS AF model. Like the lens mounts, Canon designed these sensors to be interchangeable at a Canon Service Center. Global shutter sensors à la carte will become available later this year, price and cost of servicing t.b.d.

A key advantage of the EF mount + 4K Dual Pixel CMOS AF combo is that it offers the best autofocus in the business. Autofocus is not intrinsically consumer or professional, it’s just another tool. In the case of the C700, autofocus leverages the fast, quiet, ultrasonic focus motor (USM) technology of popular Canon L Series glass.

A disadvantage of a Dual Pixel CMOS AF, however, is a rolling shutter design that under the wrong circumstances can produce skewed images during fast panning. Canon’s advanced 4K Dual Pixel CMOS AF sensor suppresses this effect but cannot eliminate it entirely.

Canon’s C700 electronic global shutter prevents “jellocam” altogether. Sports coverage, for one, is an obvious beneficiary. As is typical of global shutter sensors, there is a loss in dynamic range of one stop. (I’ll have more to say, below, about phase-detection autofocus and rolling shutter.)

The C700 matches other 4K cameras in its class in its wide range of internally recorded frame rates and compression formats, which includes various grades of Canon’s XF-AVC and ProRes recorded to CFast 2.0 cards. (Use of ProRes is a welcome first for Canon.) In a neat trick, an SD card can simultaneously record small 8-bit XF-AVC proxy files for immediate editing. Available to tame the video tonal scale is now-familiar log gamma, in fact three of them: Canon Log, Canon Log 2, Canon Log 3. (Because, I suppose, you can’t have too many.)

The C700 can output DCI 4K (4096 x 2160) as 12-bit uncompressed RAW, recorded to an optional Codex Digital RAW recorder with the latest 1TB and 2TB Codex Capture Drives. (Same Vers. 2.0 drives used in ARRI’s Alexa SXT and Panasonic’s V-RAW Recorder for VariCam 35.) This recorder is docked to, and operated by, the C700. Docking eliminates cables and a separate power supply, but it’s worth noting that, as a piggyback module, the Codex Recorder adds length to the C700, which typically gets lengthened again by the addition of a heavy brick battery. Something to be aware of when mounting or handholding the C700 in tight spaces.
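To get a feel for why terabyte drives are needed, here is a rough data-rate estimate. It is a sketch under loud assumptions: one tightly packed 12-bit sample per photosite, 24 fps, no audio, metadata, or container overhead, and drive capacities counted in decimal terabytes. Actual Codex recording times will differ.

```python
# Rough data-rate estimate for uncompressed 12-bit RAW at DCI 4K.
# ASSUMPTIONS: one 12-bit sample per photosite, tightly packed; 24 fps;
# no audio, metadata, or container overhead; 1TB = 1e12 bytes.

def raw_bytes_per_second(width, height, bit_depth, fps):
    """Sustained payload rate for an uncompressed single-sample-per-photosite stream."""
    bits_per_frame = width * height * bit_depth
    return bits_per_frame / 8 * fps

def minutes_on_drive(capacity_bytes, bytes_per_second):
    """Recording time in minutes for a drive of the given capacity."""
    return capacity_bytes / bytes_per_second / 60

rate = raw_bytes_per_second(4096, 2160, 12, 24)
print(f"{rate / 1e6:.1f} MB/s")                       # about 318.5 MB/s
print(f"{minutes_on_drive(1e12, rate):.0f} min/1TB")  # about 52 minutes
print(f"{minutes_on_drive(2e12, rate):.0f} min/2TB")  # about 105 minutes
```

Higher frame rates scale the figure linearly, so a 60 fps take would fill the same drive in well under half the time.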

Canon as a manufacturer of cameras and lenses occupies a unique space in the domain of color reproduction, which remains as much art as science, given the subjectivity and metameric inconstancy of human vision. With leading products spanning the fields of photography, video, printing, photocopying, medical imaging and electronic display, it can be said that color imaging is Canon’s raison d’être if not religion. (The name Canon derives from Guan Yin, “goddess of mercy.”) The “Canon look” has its enthusiasts and not surprisingly, C700 early adopters are upbeat, reporting striking natural skin tones in 4K (Canon Log 2), color consistency in under- and over-exposure, and usable ISO up to 6400 with rich blacks.

Speaking of London-based Codex Digital: they once supplied a bulky, external Codex Onboard RAW recorder that ARRI Alexa used to capture uncompressed ARRIRAW. Then in 2013, Alexa switched to convenient in-camera RAW recording thanks to an internal module Codex developed for ARRI. One less barnacle encrusted to the camera.

About a year later, Panasonic and Codex announced a docking RAW recorder for VariCam 35, adding a chunk behind the camera, much like Canon’s. Available since 2015, the Codex V-RAW Recorder can be seen in the illustration above, on the left, bolted at the end of the VariCam 35. Imagine the addition of a large brick battery and you can see a bazooka taking shape.

At IBC last September, Panasonic and Codex demonstrated the fruit of their latest collaborative effort, the VariCam Pure, a VariCam that records only RAW, nothing else. In the illustration above, both camera modules are a VariCam 35. Creating the new VariCam Pure on the right involved excising the middle section of the camera on the left, including the control interface with six buttons, and bolting on a new Codex V-RAW 2.0 recorder. Lighter, reduced profile, less power draw. Brilliant.

But what if you need to review takes, add LUTs and nondestructive grades (ACES, CDL), sync sound, transcode to ProRes, DNxHD, etc. for proxies, dailies or deliverables, or manage archival backups to LTO? Codex’s software-based Production Suite, which runs on a MacBook Pro or Mac Pro, ingests V-RAW files and then provides for these common needs and almost every other RAW workflow requirement I can think of. (Does same for ARRIRAW and Canon RAW.)

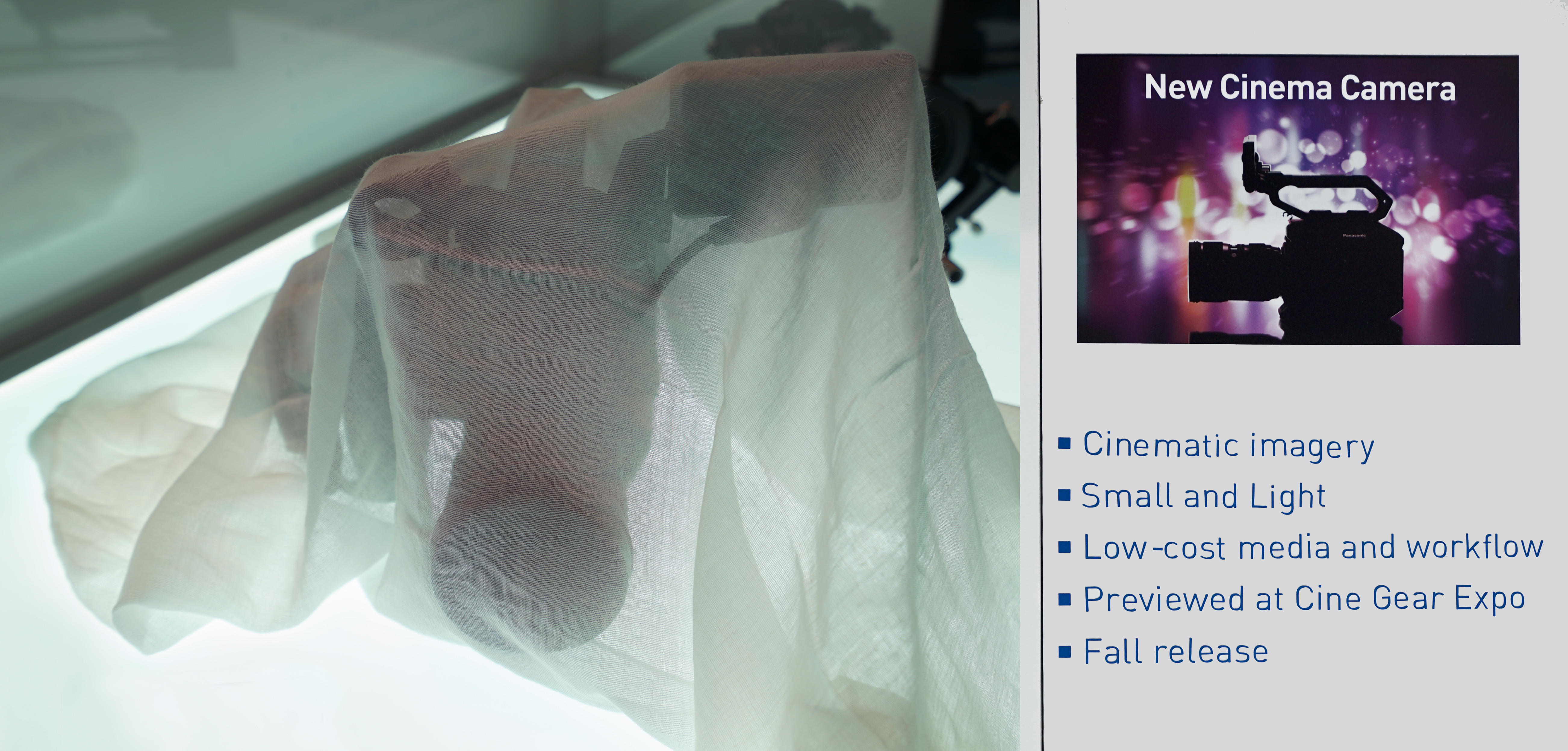

So perhaps I should backpedal with regard to Panasonic at NAB. Not only did they demonstrate and discuss VariCam Pure on the floor and in side meetings, they also announced their upcoming “preview” of a new camera at June’s Cine Gear Expo, a prototype of which they coyly hid under a swath of cheese cloth at their booth. They hinted something about a hole in their large-sensor camera line-up which they intend to fill. A deep crater would be more like it. They have nothing between a GH5 at $2K and an outfitted VariCam LT at $25K.

At a glance, Panasonic’s mystery silhouette looks like competition for Sony’s FS5, Canon’s C100 Mark II, and Blackmagic’s URSA Mini Pro 4.6K, all sub-$6K. Panasonic said its mystery cam would use affordable media, implying SD instead of VariCam’s expressP2 ($1,825 for 512GB). But an acquaintance of mine under NDA seemed unusually excited about this camera, so I’m wondering whether, in fact, it might contain what the still photographers insist on calling “full frame,” and what we cinematographers call “8-perf” after the sideways VistaVision format of the 1950s.

I could be wrong about this camera in particular, but an affordable digital motion picture camera with a full-frame sensor would make a loud splash. Since the arrival in 2008 of the epochal Canon EOS 5D Mark II, full-frame DSLRs with rudimentary video recording have created gorgeous HD and lately, 4K too. But aside from the toe-dip into the prosumer market that was Sony’s NEX-VG900, “World’s First 35mm Full-Frame Handycam Camcorder,” which decidedly failed to set the world on fire in 2012, there’s been zilch.

Heck, even my full-frame mirrorless Sony a7S, which is CMOS and can therefore window down to APS-C as needed, produces striking full-frame HD. What’s the snag in introducing a full-frame cine camera?

I can think of two hurdles that would have to be overcome. And neither involves lens choice — although this can be an issue too, which I’ll come back to. Because it goes without saying that all of Canon’s superb L-Series EF-mount lenses, in wide use, already cover 8-perf. The same holds for full-frame lenses from Nikon, Olympus, Minolta, Tokina, Sony – actually any lens ever made for full-frame DSLRs, SLRs, or 35mm rangefinders.

Hurdle #1 is super-shallow depth-of-field at wide apertures compared to conventional S35mm or APS-C. Focus-pullers suffer enough anxiety as it is. Why further drive them to drink? Photographers with full-frame cameras can, if they choose, rely on fast, modern autofocus to freeze moments in time, but motion picture cameras follow moving subjects and must keep them in focus at all times. Motion picture focus is traditionally adjusted by hand for reasons of artistic control – selecting areas of interest, splitting focus as necessary, pulling focus at appropriate speeds – although it, too, could sometimes benefit from automation. Think rambunctious, rapid-fire Armando Iannucci-style improv, and what it would take to ensure 100% focus accuracy so that every fleeting angle is usable.

Hurdle #2 is the rolling shutter found in most CMOS sensors, as mentioned above. Think whirling helicopter blades, which can appear to bend like boomerangs. Buildings that momentarily lean right or left while panning. Flaws generally not entirely fixable in post, even with warp stabilization. Jiggly handheld work is particularly vulnerable to such rolling shutter artifacts.

A Jell-O effect occurs because pixel exposure values are not read all at once, in the same instant. They’re read out row by row instead. This begins at the top row of the sensor and proceeds sequentially to the bottom. If the camera is whip-panned while pixel rows are being read out, the top and bottom of the resulting image are offset because the camera’s field of view has shifted in the meantime.
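The row-by-row mechanism can be sketched as a toy model. It assumes rows are read at a constant rate from top to bottom and that a steady pan slides the image across the sensor at a fixed number of pixels per second. The readout times and pan speed below are made up for illustration, not measured from any camera.

```python
# Toy model of rolling-shutter skew during a steady horizontal pan.
# ASSUMPTIONS: constant row readout rate, top to bottom; constant pan speed
# expressed in image pixels per second. All numbers are illustrative.

def row_offsets(rows, readout_time_s, pan_speed_px_per_s):
    """Horizontal displacement of each row relative to the top row."""
    time_per_row = readout_time_s / rows
    return [pan_speed_px_per_s * time_per_row * r for r in range(rows)]

def skew_px(rows, readout_time_s, pan_speed_px_per_s):
    """Offset between the first and last rows of one frame."""
    return row_offsets(rows, readout_time_s, pan_speed_px_per_s)[-1]

slow = skew_px(2160, 1 / 30, 2000)   # hypothetical 33 ms readout
fast = skew_px(2160, 1 / 120, 2000)  # hypothetical 8 ms readout

print(f"slow readout: {slow:.1f} px of lean")
print(f"fast readout: {fast:.1f} px of lean")
```

In this model the lean is proportional to readout time, which is why a sensor that reads out four times faster shows a quarter of the skew for the same pan.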

Full-frame sensors possess twice the surface area and at least twice the number of pixels as comparable S35 or APS-C sensors, so they take longer to read out, worsening jellocam.

The first hurdle to the use of full-frame sensors – razor-thin focus at wide apertures – can be overcome by fast and precise wireless focus tracking, using kits designed to constantly measure subject distance. These systems comprise a sensor unit attached to the camera above the lens, a wireless handset with focus knobs, and a small picture monitor with distance readouts or graphic overlays to indicate where focus lies. They enable remote manual focus control, and they can also automate focus tracking, akin to consumer autofocus. Popular systems include the infrared Preston Light Ranger 2, infrared laser Wards Sniper MK 3, and ARRI’s Ultrasonic Distance Measure UDM-1 kit used with their Wireless Compact Unit WCU-4 for full lens control of Alexa and Amira cameras.

Note that The Revenant (2015), shot with ARRI 65, a “medium” wide format equivalent to 5-perf 65mm negative film, managed to stay in focus; so too, no doubt, will Guardians of the Galaxy Vol. 2, the first film shot with a RED Weapon 8K VV in a format roughly equivalent to 8-perf VistaVision or full-frame, which will have opened widely by the time you read this.

Another path to reliable focus when shooting motion with a full-frame sensor is offered by in-plane (built into the surface of the sensor) phase-detection autofocus. Emblematic of this approach is Canon’s Dual Pixel CMOS AF, incorporated into their entire S35 Cinema EOS line-up, from C100 Mark II to the new EF-mount C700 described above.

What distinguishes Canon’s Dual Pixel CMOS AF is that all of its effective pixels can simultaneously perform both imaging and phase-detection tasks. Each “dual” pixel incorporates two independent photodiodes. Each photodiode pair sees incident light from two angles due to different placement under the curved microlens they share. This is the basis of a distance-detection technique not unlike split-image focusing in a vintage 35mm SLR viewfinder.
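The split-image analogy can be illustrated with a one-dimensional toy. This is emphatically not Canon’s algorithm. It only assumes that defocus pushes the two photodiode views of the same detail apart in opposite directions, so the lag that realigns them gives both the size and the sign of the focus error in a single measurement. The sample scene, the wraparound shift, and the sign convention are all hypothetical.

```python
# Toy 1-D illustration of phase detection. NOT Canon's algorithm: it only
# shows how two views of the same detail, pushed apart by defocus, yield a
# signed focus error from one measurement. Sign convention is arbitrary.

def shifted(signal, shift):
    """Return the signal shifted by `shift` samples, wrapping at the edges."""
    n = len(signal)
    return [signal[(i - shift) % n] for i in range(n)]

def disparity(left, right, max_lag=8):
    """Lag that best aligns `right` to `left`, by sum of absolute differences."""
    def cost(lag):
        moved = shifted(right, lag)
        return sum(abs(a - b) for a, b in zip(left, moved))
    return min(range(-max_lag, max_lag + 1), key=cost)

# Hypothetical line of scene detail as seen through one microlens row.
scene = [0, 0, 1, 3, 7, 3, 1, 0, 0, 2, 5, 2, 0, 0, 0, 0]

def views(defocus):
    """Left and right photodiode views, displaced in opposite directions."""
    return shifted(scene, defocus), shifted(scene, -defocus)

print(disparity(*views(3)))   # 6: out of focus in one direction
print(disparity(*views(-2)))  # -4: out of focus in the other direction
print(disparity(*views(0)))   # 0: the two views coincide, in focus
```

The useful property is the sign: the lens can be driven the right way immediately, with no trial-and-error hunting.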

Canon’s full-frame EOS 5D Mark IV, equipped with Dual Pixel CMOS AF, autofocuses continuously when capturing HD video, just as it does in Live View for snapping photos. (Due to a 1.74x crop when shooting 4K – an upcoming firmware update will reduce this to 1.27x – the 5D Mark IV doesn’t deliver full-frame 4K.) Like many autofocus cameras today, still or video, the 5D Mark IV also offers “face detection,” a capability to continuously track and focus moving objects such as human faces. An indication of how thoroughly Canon has thought through the underlying issues affecting successful autofocus can be found in the 5D Mark IV user manual, which devotes fifty pages to customizing focus zones, setting servo sensitivity for subject-tracking, and fine-tuning AF for subjects who move erratically or change speed or pass behind obstructions. Four pages alone list a whopping 250 lenses and their degree of compatibility with Dual Pixel AF.

So why can’t Dual Pixel CMOS AF distance data be also exploited as the basis of a remote wireless handset with focus knobs for manual focus control? I’m not talking about a simple lens-control hack like Aputure’s DEC Wireless Focus & Aperture Controller Adapter for EF Lenses to E-Mount; I’m talking about an affordable wireless handset with a picture display, access to Dual Pixel AF distance information, and two-way communication with a Canon camera that would enable reliable remote-control focus pulling. All of the Cinema EOS cameras including the C700 already make this information available in the viewfinder as a guide to manual focusing, a feature called Dual Pixel Focus Guide. How cool would it be if an assistant could wirelessly assume this responsibility, leaving an operator free just to aim and frame?

Phase detection first appeared in mirror-shutter cameras. Without getting deep into the details, it initially required a secondary mirror or half-silvered mirror (called a pellicle) to divert the incoming image to a secondary sensor dedicated to focusing. Take a peek inside a current Sony A-mount camera like the Alpha a99 II and you’ll see a fixed pellicle for this purpose. (Sony’s LAEA4 A-mount to E-mount adapter contains an identical pellicle, bestowing fast phase-detection autofocus upon Sony E-mount digital video cameras adapted to using A-mount autofocus lenses. Must be tried to appreciate how very fast and reliable it is.)

Other phase-detection designs exist, including sensors that scatter dedicated phase-detection pixels amongst image pixels, and Sony’s approach, hybrid AF that blends the strengths of phase detection and contrast detection. (Apple’s iPhone “Focus Pixels” are courtesy of Sony.) Whatever the approach, phase-detection autofocus knows instantly if the focus is in front of, or behind, the subject before it triggers a focus adjustment. Compare this to conventional contrast-detection autofocus, which stumbles in low light and poor contrast, hunting back and forth while seeking optimal focus.

To be clear, phase-detection autofocus always requires use of an autofocus lens with linear or ultrasonic motors, ideally silent, to continuously shift internal focusing elements. Thus it can never replace the conventional focus-pulling of premium mechanical cine lenses from Cooke or Zeiss. But it’s damn handy at times, and can sometimes achieve results that would be otherwise impractical. (This, from personal experience.) It’s not a stretch to predict that in the future, all professional camera systems will incorporate some version of phase-detection autofocus or focus tracking as an aid. Why not?

The second hurdle to wide use of full-frame CMOS sensors in motion pictures – rolling shutter – is the more challenging of the two. The solutions are 1) turbocharging the rate at which sensor pixel data is read out from top to bottom, to minimize the skewing effect, or 2) using a global shutter, like the optional sensor in Canon’s C700, in which all photosites are active in the same instant, then switched off at once, with pixel charges read out during a brief blanking period before the next frame is taken. However, most CMOS sensor designs avoid electronic global shutters, because they are complex and they degrade ISO, typically a full stop or more.

Real-world examples of turbocharging – fast-clocking of CMOS readout times and data processing, adding more parallel output ports – are found in recent Sony cameras like the FS5 and a6500, both of which contain wicked-fast S35/APS-C sensors and ASIC processors dedicated to this purpose. Proof that this approach works well in full-frame motion picture cameras includes the full-frame RED Dragon 8K VV CMOS sensor found in both RED Weapon 8K VV and Panavision 8K Millennium DXL (discussed below), and the larger 6.5K A3X CMOS in the ARRI Alexa 65 – all rolling shutter designs.

(Think of Alexa 65’s huge sensor as three open-gate Alexa ALEV III sensors stacked one atop the other, stitched together, then rotated 90° sideways. Hence “A3X.” Cinerama in a single camera, if you get my reference.)

Global shutters remain uncommon. Sony’s F55 arrived with an electronic global shutter, as did AJA’s low-cost CION. Blackmagic Design initially introduced URSA Mini 4.6K with a global shutter option, then was unable to deliver for image quality reasons and abandoned the option. An earlier URSA Mini 4K with global shutter is still available, with three stops less dynamic range compared to the Mini 4.6K.

All of which is to say, despite these hurdles, I think it’s high time for an affordable full-frame cine camera – and if Panasonic at Cine Gear Expo doesn’t unveil a full-frame camera first, I think there’s an even chance that Sony will later this year. Sony has just set the photography world ablaze with its new E-mount flagship, the A9, built around the world’s first full-frame stacked CMOS, a 24.2 megapixel backside-illuminated Exmor RS sensor that accelerates data processing by a factor of 20 compared to previous Sony mirrorless cameras.

“Backside-illuminated” paradoxically means that enlarged photodiodes take up all the real estate on the CMOS sensor’s top layer where the image is formed, forcing all circuitry to be placed underneath. “Stacked” means that the photodiode layer sits atop a sandwich of imager components. Directly below the photodiode layer is an image processing layer, which in turn is bonded to a layer below it containing dynamic RAM for buffering. This ultra-compact architecture – a miniaturized mash-up of sensor + image processor + RAM, previously found in smartphones – increases speed of image capture, focus calculation, exposure control, and object tracking.

The A9 is so blazing fast, it takes RAW stills at 20fps! Did I mention hybrid phase-detection autofocus and 5-axis in-body stabilization? Now dwell on the fact that the A9 uses its entire full-frame sensor to capture 6K video without pixel binning, downsamples the result to 4K (3840 x 2160) to suppress moiré from aliasing, then records clean 4K to 100 Mbps XAVC S.

How is all this hot new Sony tech not coming soon to a digital cinema camera near you? Candidate platforms for full-frame treatment might include the F5/F55 or compact FS5. The fact that Sony’s E-mount accommodates both S35 and full frame tilts the prospect of a full-frame sensor towards the FS5, since the F5/F55 doesn’t accept E-mount. (At least not yet.) I’d think any Sony full-frame cine camera would want to exploit Sony’s rapidly growing family of class-leading FE lenses (F = full, E = E-mount), especially the well-regarded G series.

While speculating about the possibilities of a full-frame digital cinema camera from Panasonic or Sony and examining obstacles like focus and rolling shutter, we haven’t really addressed the question of why we’d want a full-frame cine camera in the first place. Turns out, there are compelling advantages beyond a wider field-of-view.

My little Sony a7S is a full-frame camera with a 4K sensor: 4240 x 2832 = 12 megapixels. This is a puny pixel count by today’s digital photography standards, but this low pixel count translates into an array of giant photodiodes across the sensor’s expanse, which is key to the a7S’s low-light and low-noise renown. Larger photodiodes always bring improvements to dynamic range.

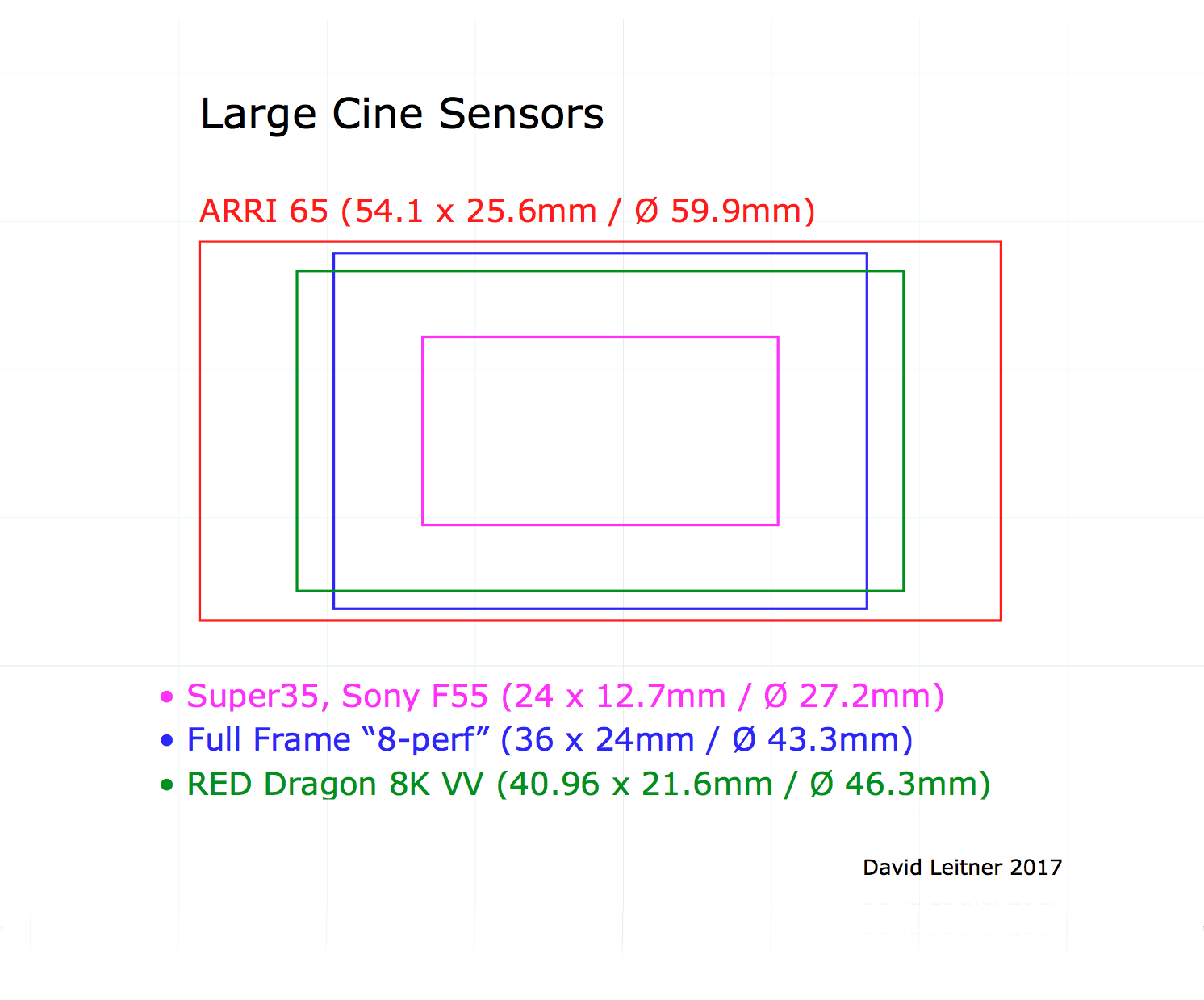

Michael Cioni, founder and president of digital post powerhouse Light Iron, articulates another advantage to full-frame sensors. He discovered in his testing of the RED Weapon 8K (see illustration of Large Cine Sensors above) that downsampling the original 8K to 4K produced a noticeably “smoother” image. This stems, to a not insignificant degree, from the fact that the Bayer color filter array used in virtually every CMOS sensor acts as a checkerboard of discrete red, green, and blue samples. Each one of the 8K picture elements (“pix-els”) is filtered for red, green, or blue only. None can detect more than one color value. The job of “deBayering” is to sample adjacent pixels and thereby synthesize an R, G, B mix for each individual pixel. But it can only ever be guesswork.

You can see, in the Bayer filter illustration below, that there are always twice as many green pixels as red or blue. So what does it mean to describe a Bayer sensor’s horizontal resolution as 8K? Depending on the row, there are either 4K green and 4K blue pixels, or 4K green and 4K red pixels. Only when the entire image is downconverted from 8K to 4K can each tiny quadrant of two green + one red + one blue form, in effect, a single 2 x 2 super pixel, each with a full R, G, G, B sampling.

Only this downsampled result, the byproduct of 8K image capture with a Bayer color filter array, can truly be said to achieve full 4K resolution — no interpolation or deBayering necessary.
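The super-pixel idea can be sketched in a few lines. This is a minimal illustration, assuming an RGGB tile order (red top-left, blue bottom-right), even image dimensions, and a plain average of the two greens. Real camera and post pipelines filter far more elaborately, and the function name and sample values are hypothetical.

```python
# Collapse an RGGB Bayer mosaic into half-resolution RGB "super pixels".
# ASSUMPTIONS: RGGB tile order (R top-left, B bottom-right), even dimensions,
# and a simple average of the two green samples. Illustration only.

def bin_rggb(mosaic):
    """mosaic: list of rows of raw values. Returns rows of (R, G, B) tuples."""
    out = []
    for y in range(0, len(mosaic), 2):
        row = []
        for x in range(0, len(mosaic[y]), 2):
            r = mosaic[y][x]                               # red photosite
            g = (mosaic[y][x + 1] + mosaic[y + 1][x]) / 2  # two green photosites
            b = mosaic[y + 1][x + 1]                       # blue photosite
            row.append((r, g, b))
        out.append(row)
    return out

# Hypothetical 4 x 4 patch of raw sensor values.
mosaic = [
    [10, 20, 12, 22],
    [24, 30, 26, 32],
    [14, 28, 16, 18],
    [20, 34, 22, 36],
]

rgb = bin_rggb(mosaic)
print(len(rgb), len(rgb[0]))  # 2 2: half the resolution in each direction
print(rgb[0][0])              # (10, 22.0, 30)
```

Every output pixel is built only from measured samples, with none borrowed from neighboring quadrants, which is the sense in which no color has to be guessed.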

Remarked Cioni in a blog post from early last year, “Super Sensors are camera systems like Alexa 65 or Weapon 8K that are … able to create a new level of smoothness that makes things look more like a photograph and less like a digital representation of film. Ansel Adams shot large format and no one has ever said, His images look too sharp! On the contrary, Adams’ images look smoother, cleaner, and multi-dimensional because they were super samples.”

Cioni should know. He led the team that Frankensteined the new Panavision 8K Millennium DXL into existence, cobbling together RED’s full-frame 35.4 megapixel Dragon 8K VV CMOS sensor, Light Iron’s color science, and Panavision’s deep camera design. (Light Iron is a wholly-owned subsidiary of Panavision.)

Introduced at last year’s Cine Gear Expo, the 10-pound Millennium DXL was the surprise hit of the show. It provides Panavision, going forward, with a unique, versatile and future-proof platform for its rental-only business. It signals the start of a shift in Hollywood’s sphere of production towards even larger CMOS sensors and the versatility in format they provide.

For example, mounted on a Millennium DXL, Panavision’s new T Series of 2x anamorphic lenses will deliver an image matching in size the standard anamorphic ‘Scope camera aperture (22 x 18.6mm), although windowed from the center of the larger, wider 17:9 Dragon 8K VV sensor (40.96 x 21.6mm). This gives Panavision bragging rights to the first true 4K anamorphic images (4.4K actually, and Bayer pixels mind you) – that is, if you disqualify ARRI Alexa’s Open Gate mode (21.20 x 17.74mm), with its mere 2,570 horizontal pixels.
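The "4.4K actually" figure can be checked with a little arithmetic. This sketch uses the sensor and aperture dimensions quoted above and the 8192 x 4320 pixel count of the Dragon 8K VV, and assumes the photosites are spread evenly across the sensor. The helper name `window_pixels` is hypothetical.

```python
SENSOR_WIDTH_MM = 40.96    # Dragon 8K VV, as quoted in the text
SENSOR_HEIGHT_MM = 21.6
SENSOR_WIDTH_PX = 8192
SENSOR_HEIGHT_PX = 4320


def window_pixels(window_mm, sensor_mm, sensor_px):
    """Photosites spanned by a centered window, assuming uniform spacing."""
    return round(window_mm / sensor_mm * sensor_px)


# Standard anamorphic 'Scope aperture, 22 x 18.6mm, windowed from the sensor.
scope_width_px = window_pixels(22.0, SENSOR_WIDTH_MM, SENSOR_WIDTH_PX)
scope_height_px = window_pixels(18.6, SENSOR_HEIGHT_MM, SENSOR_HEIGHT_PX)
```

Under these assumptions the window spans 4,400 photosites across, which is where "4.4K" comes from.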

Indeed, it is the industry’s embrace of the full RED Dragon 8K VV sensor and coming full-frame sensors like it that will leave the biggest stamp on the look of 21st century Cinema. As you can see in the Large Cine Sensors comparison above, the diagonal, or image circle (a/k/a image diameter) needed to cover the Dragon 8K VV sensor is 46.3mm, a scant three millimeters greater than 8-perf full-frame. At NAB, no less than hallowed Cooke announced an entirely new lens series, S7/i, with an image circle of 46.3mm.
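The image-circle numbers follow directly from the Pythagorean theorem. A quick sketch, taking the Dragon 8K VV dimensions as 40.96 x 21.6mm and assuming the usual 36 x 24mm for 8-perf full-frame (that second figure is my assumption, not stated above):

```python
import math


def image_circle_mm(width_mm, height_mm):
    """Diagonal of the sensor: the minimum image circle a lens must cover."""
    return math.hypot(width_mm, height_mm)


vv_circle = image_circle_mm(40.96, 21.6)   # Dragon 8K VV
ff_circle = image_circle_mm(36.0, 24.0)    # 8-perf full-frame (assumed 36 x 24mm)
difference = vv_circle - ff_circle
```

The VV diagonal rounds to 46.3mm and the full-frame diagonal to 43.3mm, a gap of about three millimeters.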

See the writing on the wall?

It’s no small irony that what low-budget DIY filmmakers pioneered by shooting full-frame HD with their Canon 5D Mark IIs nine years ago is bearing fruit today with the emergence of affordable full-frame 4K cameras – Sony a7S II, A9 – and full-frame 8K cameras – RED Weapon 8K VV, Panavision Millennium DXL – with more choices imminent. In fact, it’s quite possible that today’s full-frame and S35/APS-C will become the 35mm and 16mm of tomorrow. I didn’t invent this notion. I have already heard others in the industry saying it.

Besides Cooke, there already exist geared, cine-style primes and zooms (non-anamorphic) that cover the full-frame format – from Angénieux, Bokkeh, Bower, Canon (all Cinema lenses), GL Optics, IB/E, Leica, Samyang/Rokinon, Schneider, Sigma, Sony, Tokina, SLR Magic, Zeiss (new CP.3 third-generation Compact Primes), and others.

And let’s not overlook ARRI Alexa 65’s “8-perf 35mm” crop mode (4320 x 2880), which also delivers 4K full frame. I guess this mode might come in handy if you didn’t have enough medium-format lenses to cover the full Alexa 65 “open gate” (6560 x 3100). Fortunately, there’s been a recent surge in sets of these larger-format lenses too. At first, in 2014, when Alexa 65 debuted, there were only ARRI Vintage 765 (Hasselblad/Zeiss) and anamorphic Ultra Panavision 70 sets of primes. New sets now include Leica’s Thalia primes and ARRI’s own Prime 65 (Hasselblad HC), Prime 65 S (faster), and Prime DNA, all in the larger Alexa 65 XPL mount.

At the same time, a renaissance is underway in both 2x and 1.3x anamorphic lenses, with new primes and zooms from Angénieux, Cooke, ARRI/Zeiss, Servicevision (Scorpio brand), Vantage (Hawk brand), and SLR Magic, complementing the many existing sets of classic Panavision anamorphics. SLR Magic at NAB even introduced a $499 1.3x anamorphic adapter that mounts to the front of compact autofocus lenses with 52mm threads.

This is why many characterized NAB 2017 as the year of glass.

So, when will we also see S35 cameras with the 35.4 megapixels needed for 8K? There’s a lot of cinema glass already out there for the S35 format…

Six years ago the first 8K S35 camera, Sony’s F65, arrived with a mechanical rotary global shutter and diamond-shaped pixels instead of a Bayer checkerboard. Described in Sony’s literature as bringing “an unprecedented 20 megapixels,” it was intended mainly for 4K capture, with 4K of green pixels but half that count of red and blue (analogous to video 4:2:2 color subsampling). The F65’s 8K output was an interpolated result. A perennial topic of buzz at NAB and beyond has been, “is Sony going to show an F65 replacement this year?”

Meanwhile, RED last summer announced a new S35 Helium 8K sensor with the same 35.4-megapixel count (8192 x 4320) and 16.5-stop dynamic range as the larger Dragon 8K VV sensor. Basically its pixels got shrunk from 5 to 3.65 microns. Both sensors occupy the same advanced carbon fiber Weapon body. The RED Weapon 8K S35 with the smaller Helium sensor began shipping last October. An upgrade path to the Dragon 8K VV sensor is planned.
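For anyone who wants to check the pixel math, here is a short sketch. It uses the 8192 x 4320 count and 3.65-micron Helium pitch given above, plus the 40.96mm Dragon 8K VV width. The Helium sensor width it produces is derived from those figures under the assumption of uniform photosite spacing; it is not a manufacturer's spec. Function names are hypothetical.

```python
def megapixels(width_px, height_px):
    """Total photosite count in millions."""
    return width_px * height_px / 1e6


def pitch_microns(sensor_width_mm, width_px):
    """Center-to-center photosite spacing, assuming uniform spacing."""
    return sensor_width_mm * 1000 / width_px


pixel_count_mp = megapixels(8192, 4320)      # shared by both 8K sensors
vv_pitch = pitch_microns(40.96, 8192)        # Dragon 8K VV
# Working backwards from the quoted 3.65-micron pitch (derived, not a spec):
helium_width_mm = 3.65 * 8192 / 1000
```

The count comes to roughly 35.4 megapixels, the VV pitch to 5 microns, and the implied Helium width to just under 30mm, which is S35 territory.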

As is the case at NAB every year, there is considerably more going on in the world of cameras than I can possibly attempt to cover in this annual camera round-up. For instance, I haven’t mentioned ARRI’s amazing new Alexa SXT W, a completely wireless camera that will replace the SXT Plus and SXT Studio models. It sends, with no delay, uncompressed HD with audio and timecode for wireless monitoring. It incorporates a Wi-Fi transmitter for wireless color management and more, and it provides a third wireless system for camera control, including lens, focus, iris, and zoom.

I also haven’t mentioned the turbulence surrounding competing HDR (high dynamic range) standards at NAB. Suffice it to say that since our digital motion picture cameras already capture 14-17 stops of dynamic range, this is an issue that mostly concerns display technologies, which we hope someday will better reproduce what we routinely are able to capture today.

At the outset I mentioned that NHK is already test-broadcasting 8K Ultra-high-definition (UHD) TV by satellite daily from 10 a.m. to 6 p.m. (Who owns an 8K TV?) NHK brands it “Super Hi-Vision” for a reason. The full-featured version, if not the compressed 10-bit satellite signal, delivers 12-bit UHD at 120 fps with 77% of wide-color gamut Rec. 2020, HDR (HLG type), and 22.2 channels of audio. All this to a future 8K flat-screen in your home!

NHK plans regular 8K broadcasts in 2018, and it’s been reported that Sony intends to market 8K TVs by 2020, just in time for the 2020 Olympic games, which – you guessed it – NHK has committed to broadcast in 8K. In fact, in a trial run, NHK covered part of last year’s Rio Olympics in 8K using 4th-generation Ikegami 8K cameras with S35 CMOS sensors.

clip from the “Tokyo Girls Collection, 2015 Spring/Summer” on an 85-inch 8K LCD display.

Looks like this thing called 8K is real, folks.

Like Diogenes walking around with his lamp in search of candor, I asked repeatedly at NAB, what’s next? I intended this as a rhetorical question. Who could possibly need or want 16K, much less the category-five storm of 4x more data compared to 8K?

Blue Man Group, for one. After watching a 360-degree video of one of their loopy percussive performances from six different angles in “spherical” space, including behind an azure drummer’s ear and high up in the rafters, I asked a demonstrator what might improve the experience. Without skipping a beat, he said more resolution. Their 8K wasn’t enough for fine-grain visuals in a 360-degree spherical surround, despite stitching several 8K images together. Worse yet, he said, are those 1K-per-eye headsets!

Another person with expertise in 8K aerial imaging, on a panel I moderated, said something similar: that when working with 8K motion picture images, possessing even finer detail is never a drawback. It always proves useful, he said, and enables choices in enlarging and cropping, especially in VFX work.

So perhaps 4K is the new HD, and 8K will become the new 4K.

But 16K? Come the day, I may have to dive through that screen right behind Buster.

All photos and illustrations by David Leitner or courtesy of manufacturer.