How Signal Space and the VR Film Afterlife Are Moving Interactive Films Forward

Films and video games have been moving closer together for years, with open-world games that mimic cinematic storytelling and videos that take viewer input in the style of a choose-your-own-adventure novel. The mechanics of the latter have often been intrusive, however, making viewers click a link or, with the recent flowering of virtual reality, direct their gaze at an icon to indicate their narrative selection. While this can result in compelling products, like the 2017 VR film Broken Night, many filmmakers in the space miss the immersion of a traditional film and want to hide the more game-like control mechanics behind an increasingly seamless experience.

The new film Afterlife moves us a great deal in that direction. Released on multiple VR platforms and in a 360-degree 2D version on August 21, it’s the first VR film from the Montreal-based Signal Space Lab, an audio production house now branching out into its own IPs. A live-action drama lasting about half an hour, Afterlife deals with a family’s grief after the death of their youngest child and the unexpected journeys it sends the three remaining family members on. The cinematography, by DP Juan Camilo Palacio, is crisp and easy to follow, a necessity in a 360-degree film that asks viewers not only to follow the story but also to provide input to the game engine without necessarily being aware of it. The camera team also used a unique rig that allowed for smooth movements, and while the resulting film is limited to three degrees of freedom, the movement makes the space feel less locked down, as though the viewer actually could get up and move around. (Three degrees of freedom, or 3DoF, means the point of view is fixed so the viewer has less control, but it has the advantage of compatibility with phone-based VR and lower-end headsets like the Oculus Go, while the alternative, six degrees of freedom, or 6DoF, allows the viewer to move around the space but requires more complex image capture methods and higher-end headsets that can track the viewer’s position.)

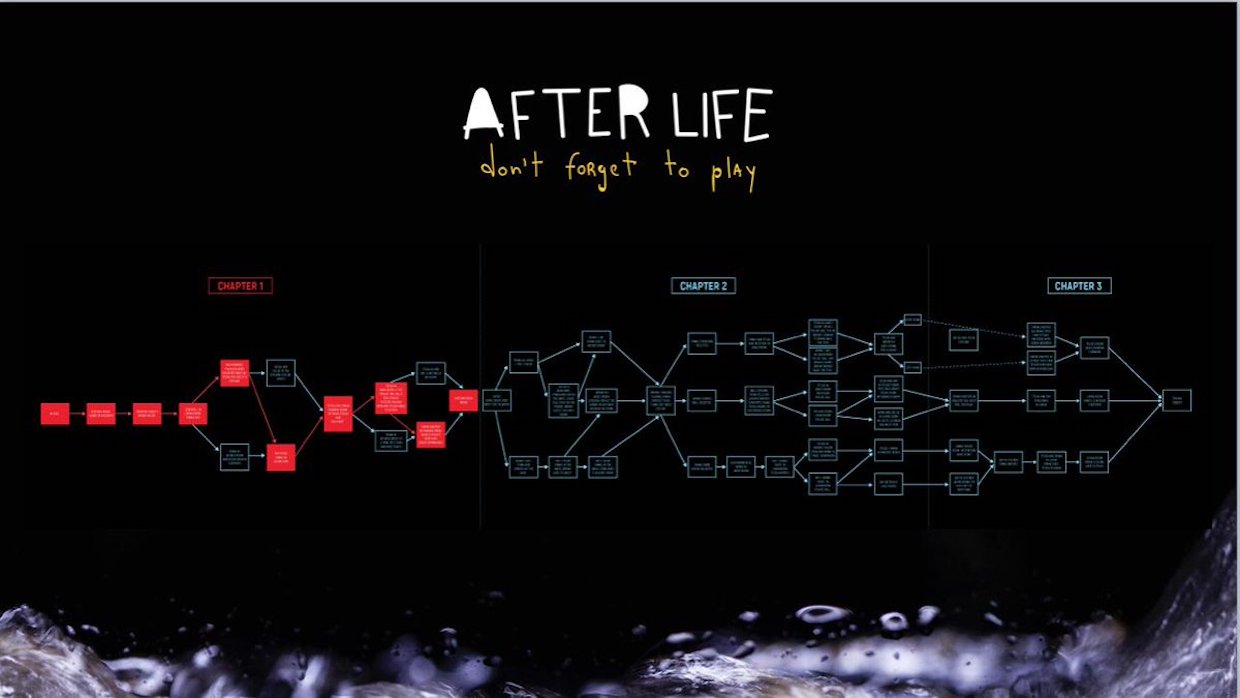

But for me as a writer, the most interesting thing about the film is how its narrative branches are packaged together to create a truly hybrid form, neither quite film nor game. On the one hand, it presents as a movie with actors, no graphic-based user interface, no hand controls, and no player-controlled character (the camera doesn’t personify a second-person perspective, for instance). On the other, the press kit still refers to players rather than viewers, and, of course, it was built on a game engine rather than just Premiere or Final Cut. Scene transitions occur with a fade into and out of black rather than a straight cut, but there are more choices than this, twenty-nine in fact, and the viewer may often be unaware of a branching point. I greatly appreciated what Signal Space Lab is calling its Seamless Interactive Cinematic interface, which allows for this level of immersion, but, in a nod to gamers and others interested in the narrative construction, there are pauses between the story’s three acts that graphically lay out all the possible narrative paths with yours highlighted in red. Of course, foregrounding the story over these mechanics allows for a greater emotional response to a story about grief and mourning that grew out of director Luisa Valencia’s own personal loss, and the filmmakers were wise to put all the technology at the service of the story.

Like movies before 1910, cinematic virtual reality has experienced growing pains as its technology, grammar, and style have rolled out over the past few years, often treated as an afterthought compared with the larger fields of VR games and enterprise applications. But now we’re on the cusp of a whole new field of storytelling where film, games, and immersive theater are merging into an entirely new medium, and Afterlife is just one project indicating how that will continue to play out.

Luisa Valencia and José Aguirre, Signal Space’s managing director and Afterlife’s executive producer, answered a few of my questions about the film.

Filmmaker: Can you tell me a little about Signal Space and how you’re moving into VR?

Aguirre: As a company that started out as an audio service provider for video game companies (which we still are, by the way), we were lucky enough to be exposed to the creative process of several studios around the world. We were also a bit jealous that while working on exciting projects we could not have much input on their creative aspects (with some exceptions, of course). So, it was a natural move for us to try to create our own content. VR caught us in the middle of that process (2016), and checked all the boxes we had when we wanted to find our path: it was relatively new tech, involved a lot of audio know-how, and it could merge film and game development, among other things. So we went for it, and the rest is history.

It is risky to make bold moves, and Afterlife is an example: the same reasons that make this piece good for some are the ones that could bring criticism from others. For example, the fact that it’s seamless could frustrate some gamers who like to see visual feedback on decisions made. Throughout the process of showcasing the piece to platform owners and higher-ups in the VR space, we found the same type of polarization: what makes it good for some makes it bad for others. This is what innovative content does. It polarizes people at first, and then, when the dust settles, you might be sitting on something great, or a big flop. For us, this is our way of living, and with help from the Canada Media Fund and a very supportive community of Montreal developers, we get the chance to go for it and see the results. So, while we still have the chance to try our ideas and challenge ourselves creatively, we will continue to produce content for VR, console, handheld, television, or any other place where we can showcase our art.

Filmmaker: What was the writing process like for Afterlife, both creatively and technically? Did you use branching narrative software like Celtx for planning out all the storylines?

Valencia: The process is not that different from writing for two-dimensional platforms in the sense that structurally we still need a progression, an arc, a character or characters with their journeys, an engine that drives the story and keeps it moving forward. I guess the difference comes in the design. We started the writing process with what we can call a traditional branching tree diagram or map. It became the skeleton to which we started adding flesh. For layout purposes, we used Lucidchart. The writing happened in traditional scriptwriting software.

Filmmaker: I imagine the branching narrative also affected the production process. What was your shooting ratio, and how much footage total is there for the finished film?

Valencia: We shot an average of three to six scenes per day, depending on their complexity. In some cases, like when we were using camera motion, the ratio was reduced to one scene per day. Afterlife as a whole has close to two hours of footage, including all branching narratives. However, a single person only experiences 30 to 40 minutes of content, depending on the path they take.

Filmmaker: On that note, what type of camera or sound equipment did you use for the filming? Were there any challenges or surprising successes in that area?

Valencia: We used a regular 360 stereoscopic camera and a set of Ambeo VR mics for the ambiance with lavalier microphones on the actors. Challenges, all of them! And as a surprising success, we ended up with a smooth, organic, and almost imperceptible camera movement that we achieved with an in-house custom-made camera crane, specially designed to allow 6DoF movement on an indoor set.

Filmmaker: The most innovative thing about Afterlife may be the points where the narrative branches, which as we mentioned are less obtrusive and more seamless than in similar films in the past. How were those designed and executed? What will the finished experience be like for viewers in that sense?

Valencia: The branching was designed after establishing the dramatic structure of a regular linear narrative. We took it as the base and we began to add layers on the top and bottom of it like a lasagna. For the execution, the continuity was super challenging as we were not shooting by branches. So we designed a map that we followed rigorously until the end of the process where the puzzle got assembled in the game engine. Viewers will experience each chapter as a linear story and will land at the end in a map that shows them the path they have taken and the other possibilities that could have been explored. As the navigation happens in an invisible way, we hope that people will be curious about how and at what moments the story was bifurcated so that they feel inspired to play it again and hopefully land in a different ending.
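For readers curious how a map like the one Valencia describes might be represented in software, here is a minimal sketch in Python. It is not Signal Space Lab’s implementation: the scene names, the `Scene` class, the `play` and `render_map` functions, and the idea of reading a “gaze target” as the invisible input are all assumptions made for illustration. It only shows the general pattern of a branching graph that is walked silently and then displayed with the taken path marked.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Scene:
    """One node in a hypothetical branching map."""
    name: str
    # Maps an assumed, invisible input (e.g. what the viewer looked at)
    # to the name of the next scene.
    branches: Dict[str, str] = field(default_factory=dict)
    # Scene to play when the input matches no branch; None marks an ending.
    default: Optional[str] = None


def play(scenes: Dict[str, Scene], start: str, inputs: List[str]) -> List[str]:
    """Walk the graph from `start`, consuming one input per scene,
    and return the names of the scenes visited in order."""
    path = [start]
    current = scenes[start]
    remaining = iter(inputs)
    while current.branches or current.default:
        signal = next(remaining, None)  # None once the inputs run out
        nxt = current.branches.get(signal, current.default)
        if nxt is None:
            break
        path.append(nxt)
        current = scenes[nxt]
    return path


def render_map(scenes: Dict[str, Scene], path: List[str]) -> List[str]:
    """List every scene, marking the ones on the viewer's path with '*'."""
    taken = set(path)
    return [f"{'*' if name in taken else ' '} {name}" for name in scenes]


# Hypothetical scene names, purely for illustration.
scenes = {
    "opening": Scene("opening", {"mother": "kitchen", "father": "garage"}, "kitchen"),
    "kitchen": Scene("kitchen", {"photo": "ending_a"}, "ending_b"),
    "garage": Scene("garage", {}, "ending_b"),
    "ending_a": Scene("ending_a"),
    "ending_b": Scene("ending_b"),
}

path = play(scenes, "opening", ["father", "window"])
for line in render_map(scenes, path):
    print(line)
```

Because every scene falls back to a default, the story always moves forward even when the viewer gives no recognizable signal, which is one plausible way to keep branching invisible. The end-of-chapter map is then just the full graph with the visited scenes marked.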

Filmmaker: Are you able to talk about monetization and distribution, not just for Afterlife but for further films going forward?

Aguirre: Our strategy is to make the piece accessible on as many platforms as possible. We are currently offering Afterlife as a premium VR product on Viveport, Steam, PSVR, and Oculus stores. The launch price for it is $5.99. We are also offering a handheld version (360 interactive) on the App Store for $2.99. Afterlife can also be accessed for free through Viveport Infinity. Finally, we will distribute Afterlife through SynthesisVR for the LBE market. We will assess the success of this strategy and adapt for the next ones accordingly.